ChatGPT voice and vision announced – speaking, multi-modal input confirmed

ChatGPT voice and vision are available now, marking the next evolution of OpenAI’s game-changing AI chatbot. The update enables ChatGPT to both speak and accept images as input, which could dramatically alter its use cases and the development of third-party integrations with the tool. The new features are powered by OpenAI’s existing AI audio model, Whisper, and its new AI image model, GPT-4V. The voice model allows voice conversations with ChatGPT on Android and iOS mobile devices, while the image capabilities cover both image recognition and image generation through DALL-E 3. The announcement came via co-founder Sam Altman on X (formerly Twitter), who urged people to give the new additions a try. Users can find an overview and example uses for both ChatGPT voice mode and visual input on the OpenAI website.

ChatGPT voice overview – AI voice control

“So ChatGPT can speak – what’s the big deal?” said nobody. If you know some of the things AI can be used for, it makes sense that adding speech greatly widens the scope of use. Even more exciting, ChatGPT Voice is now available for free to all users!

For now, it’s interesting to note that users will be able to “chat back and forth” with ChatGPT. This voice interaction puts it ahead of alternative AI chatbots, and indeed of Google Assistant, which can’t act on your voice commands through plugins the way ChatGPT can. That could affect areas such as customer service, as well as ChatGPT’s ability to write (or tell) a story.

OpenAI states that it has been working with professional voice actors to develop voice mode, and that creating synthetic voices from a few seconds of real speech “opens doors to many creative and accessibility-focused applications”. Suggested applications of human-like audio from the new text-to-speech model include voice translation, reading a bedtime story aloud, and AI-generated podcasts.

Yes, this also brings potential risk, but the post states that ChatGPT voice will be developed to ensure it is safe and cannot be used maliciously. In fact, it is being developed specifically for use in voice chat in order to reduce that risk.

How to use ChatGPT voice control

OpenAI confirmed on November 21st that “ChatGPT with voice is now available to all free users.” To use it, you can “Download the app on your phone and tap the headphones icon to start a conversation.”

OpenAI only recently filed to trademark the logo for its voice product. You can find the USPTO Trademark & Patent filing, dated October 5th, by searching for Openai Opco L L C Trademarks & Logos.

OpenAI’s new visual AI model – GPT-4V

Speaking of safety and risk management, a post on the OpenAI research blog under “Safety & Alignment” discusses the controls necessary over such a powerful function.

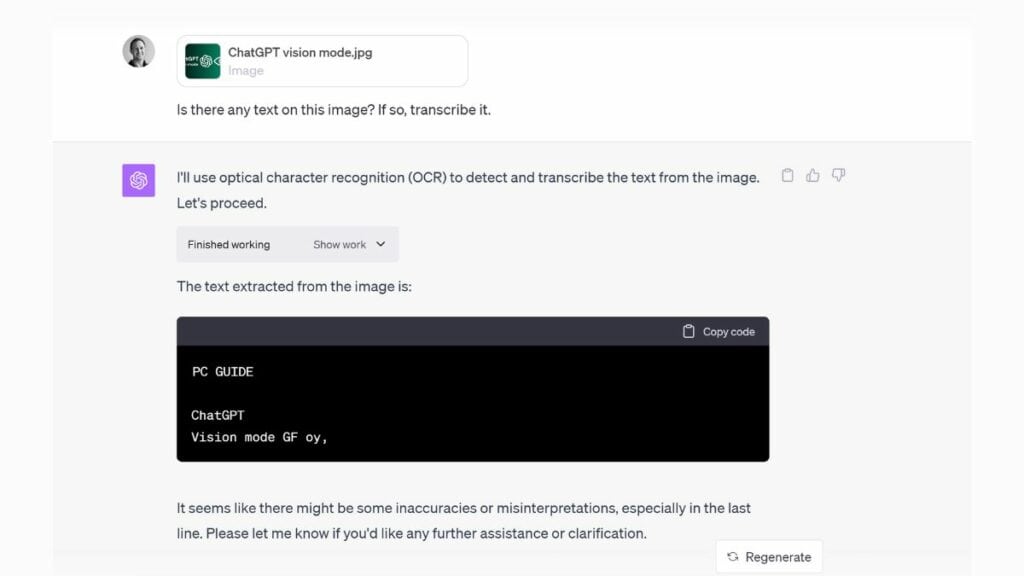

The post introduces the new visual model as follows: “GPT-4 with vision (GPT-4V) enables users to instruct GPT-4 to analyze image inputs provided by the user, and is the latest capability we are making broadly available. Incorporating additional modalities (such as image inputs) into large language models (LLMs) is viewed by some as a key frontier in artificial intelligence research and development. Multimodal LLMs offer the possibility of expanding the impact of language-only systems with novel interfaces and capabilities, enabling them to solve new tasks and provide novel experiences for their users. In this system card, we analyze the safety properties of GPT-4V. Our work on safety for GPT-4V builds on the work done for GPT-4 and here we dive deeper into the evaluations, preparation, and mitigation work done specifically for image inputs.”

ChatGPT vision overview – AI visual input

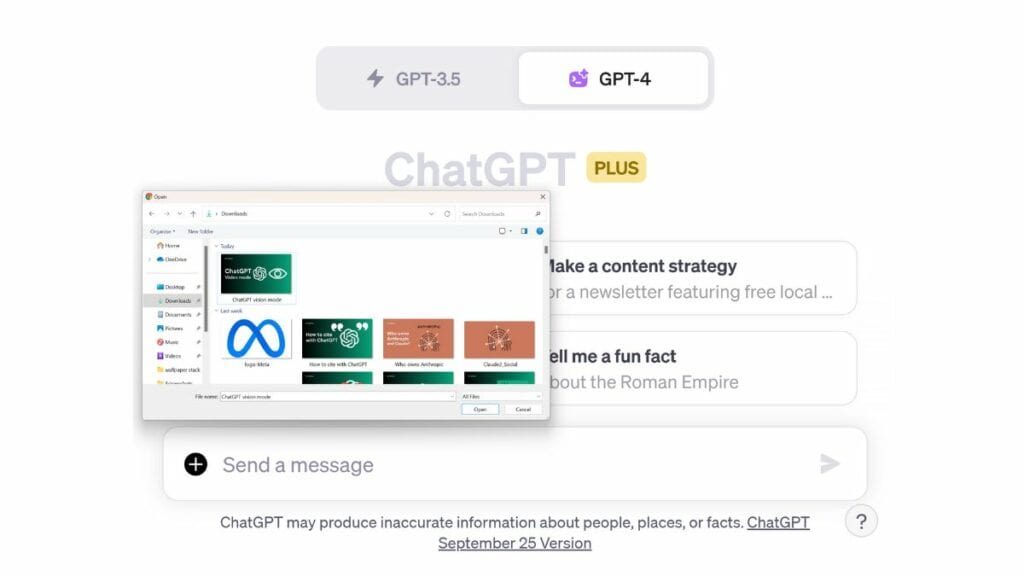

ChatGPT image upload was previously only possible via workarounds (using plugins and image links). This update makes it possible to upload images directly to the ChatGPT interface, without plugins, and to prompt the AI chatbot with an image file and a text prompt at the same time. This practice is being called “visual prompting” and is a cutting-edge capability of multi-modal chatbots.
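For developers curious what “an image file and a text prompt at the same time” might look like in practice, here is a minimal Python sketch. It only builds a single message that carries both parts and does not contact any service. The function name `build_visual_prompt` is hypothetical, and the message layout (a list of `text` and `image_url` parts with a base64 data URL) is an assumption modeled on the format OpenAI documents for image inputs in its developer API. It is not a description of how the ChatGPT app itself handles uploads.

```python
import base64
import json


def build_visual_prompt(image_bytes: bytes, question: str,
                        mime_type: str = "image/jpeg") -> dict:
    """Combine one image and one text prompt into a single user message.

    Assumption: the layout below mirrors the multi-part message format
    documented for image inputs in OpenAI's developer API.
    """
    # Encode the raw image bytes so they can travel inside a JSON payload.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real photo in this sketch.
    placeholder_image = b"placeholder bytes, not a real photo"
    message = build_visual_prompt(
        placeholder_image, "How do I lower the seat on this bike?"
    )
    print(json.dumps(message, indent=2))
```

The point of the sketch is simply that the image and the question travel together in one prompt, which is what distinguishes visual prompting from the older plugin and image-link workarounds.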

As with voice, OpenAI states that “Vision-based models also present new challenges, ranging from hallucinations about people to relying on the model's interpretation of images in high-stakes domains”. Its work includes addressing risk in domains such as extremism and scientific proficiency, with the goal again being responsible usage.

The initial example of ChatGPT vision in use shows a user uploading an image of a bike and asking for help lowering the seat. The usefulness is clear, especially for day-to-day tasks. But OpenAI has also worked with the team at Be My Eyes, an app for blind and visually impaired people. This active focus on accessibility strengthens ChatGPT’s standing as the world’s default AI chatbot, a position from which it now seems impossible to dethrone.

The company states that such image analysis can help users find functions on things like remote controls. But it can also cause issues when analyzing images that include people. As such, OpenAI has stated that it has worked to “significantly limit ChatGPT's ability to analyze and make direct statements about people.” This is not only because ChatGPT isn’t always accurate, but also to protect individual privacy.

Steve's opinion

The new ChatGPT voice and image inputs are quite a leap from pure text interaction. They come with clear challenges and safety concerns, and clear opportunities too. Of course, the company will gather more data as users engage with the new tools, which should help it refine how they are used. You can find further guidance on using ChatGPT vision and ChatGPT voice here on PC Guide!