What is Nvidia’s Blackwell AI? A gateway to the age of AI supercomputing

Nvidia’s plans for a supercomputing generative AI platform are now clearer. Named ‘Blackwell’ (after mathematician and game theorist David Blackwell), it introduces what Nvidia describes as its most advanced GPU architecture to date. So what is Blackwell AI, the platform Nvidia CEO Jensen Huang has said would usher in a $100 trillion accelerated computing industry with generative AI at its core?

Speaking to more than 11,000 attendees at the SAP Center arena in San Jose during Nvidia’s week-long GTC 2024 conference, Huang said, “The future is generative… which is why this is a brand new industry. The way we compute is fundamentally different. We created a processor for the generative AI era.”

Blackwell AI Revealed

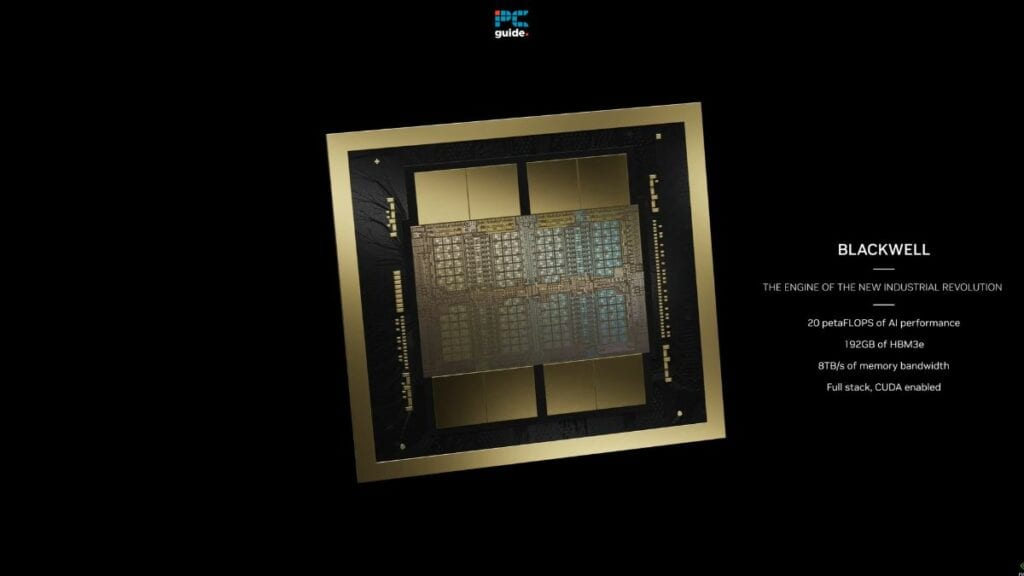

The Blackwell GPU packs 208 billion transistors and takes its name from David Harold Blackwell, a University of California, Berkeley mathematician. Huang said that the GPU not only sets a new benchmark in processing power but also marks a shift from traditional retrieval-based computing to a model in which content is generated dynamically, in real time.

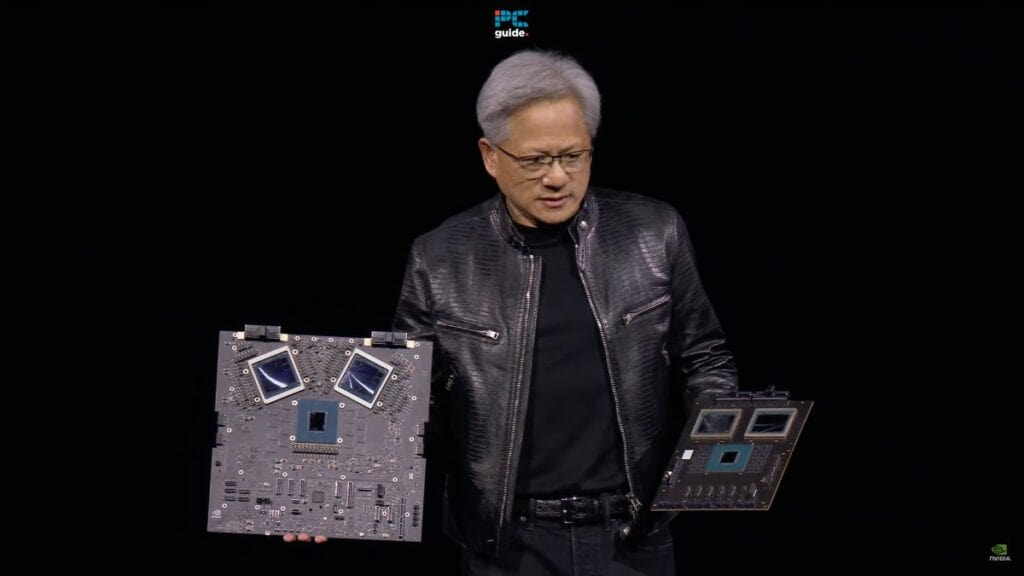

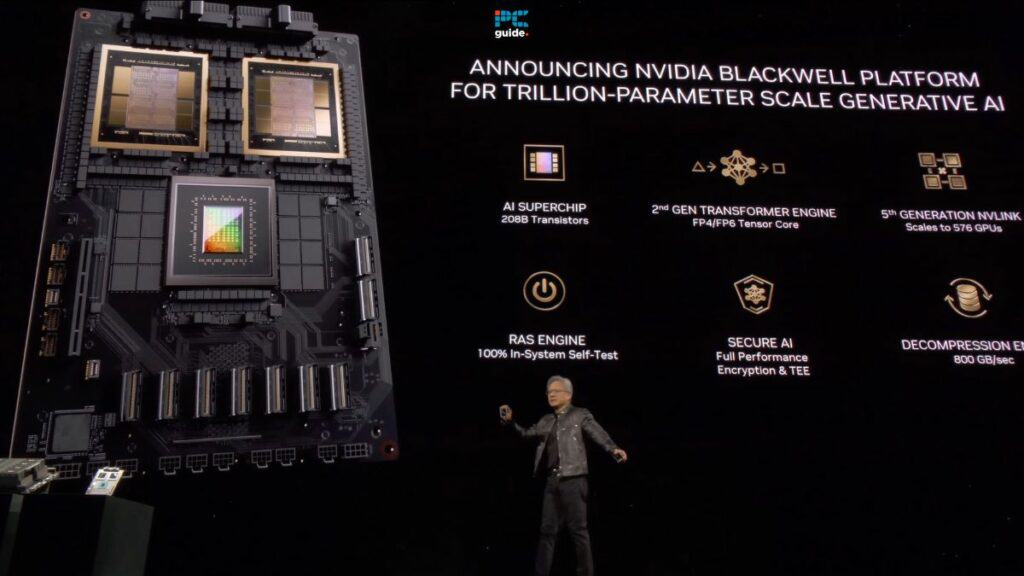

Huang stressed that Blackwell is not just a GPU but a platform, with six new technologies on board, each optimized for AI workloads.

The main components of the Blackwell platform are:

- The World’s Most Powerful GPU: Containing 208 billion transistors, the Blackwell GPU joins two dies with a 10 TB/s chip-to-chip link so that they operate as a single, unified GPU.

- Second-Generation Transformer Engine: Adds support for 4-bit floating-point (FP4) AI inference, which Nvidia says doubles the compute and model sizes the GPU can handle.

- High-Speed NVLink: The fifth-generation NVLink provides 1.8 TB/s of bidirectional throughput per GPU and lets up to 576 GPUs communicate with one another.

- RAS Engine: Blackwell GPUs include a dedicated engine for reliability, availability, and serviceability (RAS) that uses AI-based monitoring to check the system's health and flag likely failures before they happen.

- Secure AI: Confidential computing features that protect AI models and the data they process.

- Efficient Decompression Engine: Designed to speed up how quickly the data can be processed, save GPU cycles, and reduce computational cost.

Blackwell AI – outpacing Moore's Law

Blackwell AI’s standout feature is its token generation capability, which is five times that of its predecessor, Hopper. Beyond that, Huang said Nvidia has achieved a roughly 1,000-fold increase in AI computing power in the eight years between its Pascal architecture (2016) and the arrival of the Blackwell platform. That pace far exceeds what Moore’s Law would predict.
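
A rough back-of-the-envelope check of the Moore’s Law comparison can be sketched in Python. It assumes a gain of about 1,000 times over the eight years from Pascal to Blackwell (the figure Huang gave in the keynote) and reads Moore’s Law as a doubling every two years. Both values and all function names here are illustrative assumptions, not Nvidia’s own methodology.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor if capability doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def annualized_growth(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


YEARS = 2024 - 2016        # Pascal (2016) to Blackwell (2024)
CLAIMED_FACTOR = 1000.0    # assumption: roughly 1,000x, as cited in the keynote

moore = moores_law_factor(YEARS)                     # four doublings in eight years
ratio = CLAIMED_FACTOR / moore                       # how far ahead of that pace
yearly = annualized_growth(CLAIMED_FACTOR, YEARS)    # implied year-over-year multiplier

print(f"Moore's Law over {YEARS} years: {moore:.0f}x")
print(f"Claimed gain: {CLAIMED_FACTOR:.0f}x ({ratio:.1f} times the Moore's Law pace)")
print(f"Implied yearly multiplier: {yearly:.2f}x")
```

Under these assumptions, a two-year doubling yields a 16-fold gain over eight years, so a 1,000-fold gain is about 62 times larger. Much of that difference comes from architectural changes and lower-precision arithmetic rather than from transistor scaling alone.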

How the AlexNet Cat Revealed Computing's New Age

At Blackwell’s unveiling, Jensen Huang revisited the foundational significance of the 2012 AlexNet breakthrough, a pivotal moment in deep learning. Huang described it as “first contact” with a new software development paradigm.

AlexNet showcased the potential of deep learning to recognize complex images: shown a picture of a cat, it returned the label “cat.” Generative AI runs that process in reverse, creating a coherent image of a cat simply from the typed letters “cat.” As an early demonstration that neural networks could learn what images mean, AlexNet helped pave the way for models like ChatGPT and DALL-E.

The profound capabilities of generative models lie in their ability to understand what a thing is and what it means in context. This understanding enables them to translate a concept from one form to another: text to image, image to video, brainwave to speech, and more. This fluid translation between forms of data is a hallmark feature of generative computing.

New Types of AI Software

Discussing the generative AI future that Blackwell is meant to help usher in, Huang showed off Nvidia’s Earth-2 project, an initiative that uses generative AI to create high-resolution weather prediction models. On the benefits of AI in computational design, Huang said,

“Anything you can digitize, you can learn some patterns from it, and if we can understand its meaning, we might be able to generate it.” He then linked this idea to the potential of generating new content, drugs, manufacturing, and materials.

The $100 trillion AI Age is Here

Huang also introduced Nvidia Inference Microservices (NIM), a framework for deploying AI models. Huang referred to it as a “digital box” that packages pre-trained AI models, optimized for Nvidia’s ecosystem, for delivery to developers. Huang observed that it transformed Nvidia into a kind of “digital foundry” and introduced a new era of AI software.

Envisioning the future, Huang spoke of a transformative shift in software development, where assembling teams of specialized AIs under the oversight of a super AI would become the norm. This future envisages a landscape where a company’s data (for example, its PDFs) can be encoded into proprietary AI databases that employees can talk to and query, effectively taking a company’s stored knowledge and turning it into an accessible, conversational resource.
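
To make the idea of a queryable company knowledge base concrete, here is a minimal Python sketch of the retrieval step only. It is not Nvidia’s implementation: the `KnowledgeBase` class, the file names, and the sample sentences are all hypothetical, and a toy word-count vector stands in for the learned embeddings a real system would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class KnowledgeBase:
    """Hypothetical in-memory store of document text and its vectors."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        self.entries.append((doc_id, text, embed(text)))

    def query(self, question: str, top_k: int = 1) -> list[tuple[str, str]]:
        """Return the `top_k` documents most similar to the question."""
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)
        return [(doc_id, text) for doc_id, text, _ in ranked[:top_k]]


# Hypothetical text extracted from a company's PDFs.
kb = KnowledgeBase()
kb.add("hr-policy.pdf", "Employees accrue vacation days every month of service.")
kb.add("it-guide.pdf", "Reset your password through the internal IT portal.")
kb.add("travel.pdf", "Book flights and hotels with the approved travel agency.")

best = kb.query("How do I reset my password?")
print(best[0][0])  # the document judged most relevant to the question
```

In a conversational system, the retrieved passage would then be handed to a language model to phrase the answer; that generation step is omitted here.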

Blackwell AI – final thoughts

In conclusion, Huang said that generative AI and accelerated computing will form the backbone of a new $100 trillion industry built on the Blackwell platform. He reiterated that a GPU is no longer just a chip but a platform optimized for a new model of computing, one where content generation and contextual intelligence are at the heart of everything. By Nvidia’s account, the era of AI supercomputing has arrived, and Blackwell is our first glimpse of what it looks like.