Meta AI releases Emu Edit and Emu Video – Generative AI for social


The new AI tools are built on Meta’s own Emu foundational model, announced at Meta Connect in September 2023. Emu Edit and Emu Video bring generative AI capabilities to Meta’s social media apps Facebook and Instagram, in the form of instruction-based image editing and text-to-video generation. So how good is CEO Mark Zuckerberg’s new AI-powered video tool?

What is Emu Edit?

Emu Edit lets you create and edit AI-generated images within Meta’s social media apps Facebook and Instagram. Using simple text prompts, you can produce images in any style, much as you would with alternative AI art generators such as Midjourney or Stable Diffusion. We’ve already seen an early iteration of this technology in Meta’s AI stickers, released earlier this year.


The new AI image editor represents “a novel approach that aims to streamline various image manipulation tasks and bring enhanced capabilities and precision to image editing.”

Available now, it is “capable of free-form editing through instructions, encompassing tasks such as local and global editing, removing and adding a background, color and geometry transformations, detection and segmentation, and more.” Its philosophy differs from some current methods on the market in how it prioritizes the text instructions. Meta affirms that “the primary objective shouldn't just be about producing a ‘believable’ image. Instead, the model should focus on precisely altering only the pixels relevant to the edit request. Unlike many generative AI models today, Emu Edit precisely follows instructions, ensuring that pixels in the input image unrelated to the instructions remain untouched. For instance, when adding the text ‘Aloha!’ to a baseball cap, the cap itself should remain unchanged.”
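Meta’s criterion, that pixels unrelated to the instruction stay untouched, can be made concrete with a simple check. The sketch below is our own illustration, not Meta’s evaluation code. It assumes grayscale images stored as nested lists and a hypothetical boolean mask marking the region the instruction is allowed to change. It reports what fraction of pixels outside that region survived the edit unchanged.

```python
from typing import List

Image = List[List[int]]   # grayscale pixel values, row-major
Mask = List[List[bool]]   # True where the edit is allowed to change pixels


def untouched_fraction(original: Image, edited: Image, edit_mask: Mask) -> float:
    """Fraction of pixels OUTSIDE the edit mask that are identical after editing.

    Assumes all three grids share the same dimensions. A result of 1.0 means
    the edit stayed entirely inside the masked region.
    """
    outside = 0
    unchanged = 0
    for orig_row, edit_row, mask_row in zip(original, edited, edit_mask):
        for before, after, in_edit_region in zip(orig_row, edit_row, mask_row):
            if in_edit_region:
                continue  # changes inside the target region are expected
            outside += 1
            if before == after:
                unchanged += 1
    # If the mask covers the whole image, there is nothing to preserve.
    return unchanged / outside if outside else 1.0


if __name__ == "__main__":
    # A 3x3 "cap" image; the hypothetical instruction targets only the centre pixel.
    original = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
    mask = [[False, False, False], [False, True, False], [False, False, False]]

    precise = [[10, 10, 10], [10, 99, 10], [10, 10, 10]]  # only the centre changed
    sloppy = [[12, 10, 10], [10, 99, 10], [10, 10, 11]]   # two stray changes

    print(untouched_fraction(original, precise, mask))  # 1.0
    print(untouched_fraction(original, sloppy, mask))   # 0.75
```

As described, Emu Edit works from the instruction alone. The mask here exists only to score the result after the fact.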

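The intended result is more precise editing, with changes confined to the parts of the image that the instruction actually targets. Because Emu Edit also covers tasks such as detection and segmentation, we expect Meta’s implementation to perform well on computer vision tasks too.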


What is Emu Video?

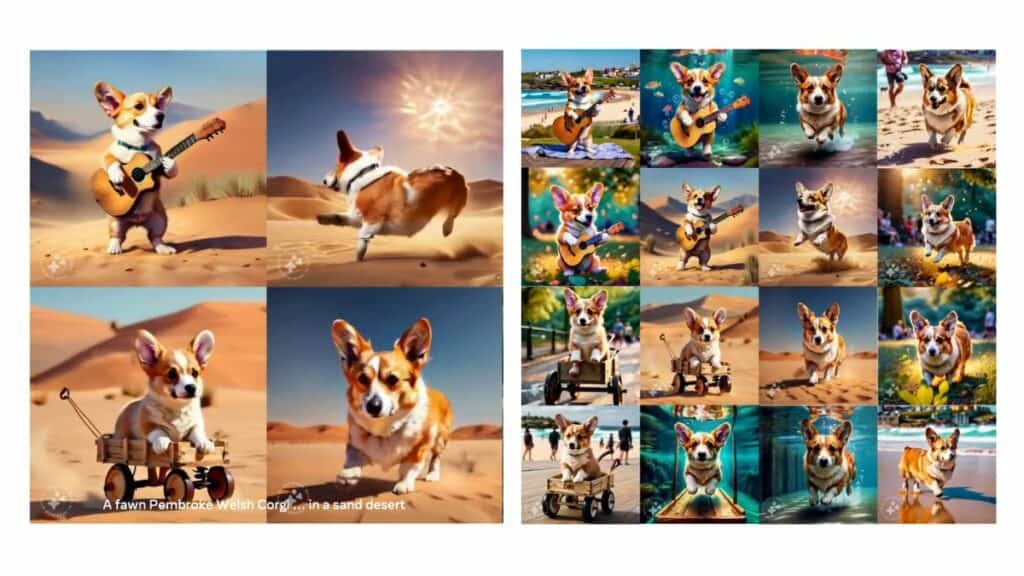

Emu Video lets you animate AI-generated images, such as those made with Emu Edit. Meta’s AI video generator turns the input image into a four-second clip at 512×512 resolution and 16fps. The videos can be in any visual style you can describe, but their visual quality is limited to something similar to a GIF.
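For a sense of scale, the stated output format works out as follows. The uncompressed 8-bit RGB figure is our own assumption for illustration. Real video files are compressed and far smaller.

```python
# Output format as described for Emu Video: 512x512 pixels, 16 fps, 4 seconds.
WIDTH = 512
HEIGHT = 512
FPS = 16
SECONDS = 4
BYTES_PER_PIXEL = 3  # assumption: uncompressed 8-bit RGB, no alpha channel


def clip_stats(width: int, height: int, fps: int, seconds: int, bytes_per_pixel: int):
    """Return (frame count, pixels per frame, raw size in bytes) for a clip."""
    frames = fps * seconds
    pixels_per_frame = width * height
    raw_bytes = frames * pixels_per_frame * bytes_per_pixel
    return frames, pixels_per_frame, raw_bytes


if __name__ == "__main__":
    frames, per_frame, raw = clip_stats(WIDTH, HEIGHT, FPS, SECONDS, BYTES_PER_PIXEL)
    print(f"{frames} frames of {per_frame:,} pixels each")  # 64 frames of 262,144 pixels each
    print(f"{raw / 2**20:.0f} MiB uncompressed")            # 48 MiB uncompressed
```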

Is Meta’s AI-generated video any good?

16fps is a low frame rate compared with the 24fps of most films you’ve ever seen and the 30fps of most social media video content. More frames do not objectively make a video better, but Emu Video’s 16fps output looks much like the GIF format we’re all familiar with. It’s not great yet, to say nothing of the mediocre 512×512 (and therefore square) resolution. That said, it’s pretty good for the scale at which Meta is deploying it, and as the technology matures we can expect these aspects to improve throughout 2024.
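The frame-rate gap is easier to see as time per frame. This small sketch compares the three rates mentioned above over a four-second clip. The labels are ours, and the figures are simple arithmetic, not measurements.

```python
CLIP_SECONDS = 4  # Emu Video clip length


def frame_interval_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def frames_in_clip(fps: int, seconds: int = CLIP_SECONDS) -> int:
    """Number of frames needed to fill a clip of the given length."""
    return fps * seconds


if __name__ == "__main__":
    for label, fps in [("Emu Video", 16), ("film", 24), ("social video", 30)]:
        print(f"{label:>12}: {fps} fps, {frame_interval_ms(fps):.1f} ms per frame, "
              f"{frames_in_clip(fps)} frames in {CLIP_SECONDS} s")
```

At 16fps, each frame is held for 62.5 ms. That compares with roughly 41.7 ms at 24fps and 33.3 ms at 30fps. Over four seconds, the result is 64 frames instead of 96 or 120, which goes some way toward explaining the GIF-like feel.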

Meta’s AI video generation model builds on the firm’s Emu foundational model and takes a novel approach to text-to-video generation based on diffusion models. The video generation process uses a “unified architecture for video generation tasks that can respond to a variety of inputs: text only, image only, and both text and image.”
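To show how one entry point can accept all three input types, here is a minimal sketch. Every function in it is a hypothetical stand-in rather than Meta’s API, and the “models” are stubs that only pass placeholders around. When only text is supplied, the sketch first produces an image from the text and then animates that image with the text. Passing an empty prompt in the image-only case is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Image:
    description: str  # placeholder: stands in for real pixel data


@dataclass
class Video:
    frames: List[Image]
    fps: int


def text_to_image(prompt: str) -> Image:
    """Hypothetical stand-in for a text-to-image diffusion model."""
    return Image(description=f"image of {prompt}")


def animate(first_frame: Image, prompt: str, seconds: int = 4, fps: int = 16) -> Video:
    """Hypothetical stand-in for a video model conditioned on text and an image.

    A real model would synthesise motion; this stub just repeats the frame.
    """
    return Video(frames=[first_frame] * (seconds * fps), fps=fps)


def generate_video(prompt: Optional[str] = None, image: Optional[Image] = None) -> Video:
    """One entry point that accepts text only, image only, or both."""
    if prompt is None and image is None:
        raise ValueError("need a text prompt, an image, or both")
    if image is None:
        # Text only: generate the first image from the text, then animate it.
        image = text_to_image(prompt)
    # Image only: we assume an empty prompt is passed to the video step.
    return animate(image, prompt or "")


if __name__ == "__main__":
    clip = generate_video(prompt="a corgi surfing at sunset")
    print(len(clip.frames), clip.fps)   # 64 16
    print(clip.frames[0].description)   # image of a corgi surfing at sunset
```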

There are two steps to creating an AI-generated video with this new state-of-the-art video tool. First, it generates an image “conditioned on a text prompt”. It then generates a video “conditioned on both the text and the generated image.” According to Meta, this “factorized” or split approach to video generation “lets [Meta] train video generation models efficiently”, and “factorized video generation can be implemented via a single diffusion model.” Meta is responsible for the “critical design decisions, like adjusting noise schedules for video diffusion, and multi-stage training”, essentially all the highly technical stuff, while users need only provide a little creativity.
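The quoted text doesn’t say how the noise schedule is adjusted. Meta’s Emu Video research reportedly uses a zero terminal-SNR schedule, in which the final diffusion step is pure noise. Treat that attribution as our assumption. The sketch below is not Meta’s code. It shows the zero terminal-SNR rescaling described by Lin et al. (2023), “Common Diffusion Noise Schedules and Sample Steps are Flawed”, applied to a standard linear beta schedule.

```python
import math
from typing import List


def linear_betas(steps: int, start: float = 1e-4, end: float = 0.02) -> List[float]:
    """A common linear beta schedule (DDPM-style defaults)."""
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def rescale_zero_terminal_snr(betas: List[float]) -> List[float]:
    """Rescale a beta schedule so the final step has zero signal-to-noise ratio.

    Shifts and scales sqrt(alpha_bar) so that its last value is exactly 0
    while its first value is unchanged, then converts back to betas.
    """
    # Cumulative product of (1 - beta) gives alpha_bar_t.
    alpha_bar, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bar.append(running)

    sqrt_ab = [math.sqrt(a) for a in alpha_bar]
    first, last = sqrt_ab[0], sqrt_ab[-1]
    # Shift so the last value is 0, then scale so the first value is restored.
    sqrt_ab = [(s - last) * first / (first - last) for s in sqrt_ab]

    alpha_bar = [s * s for s in sqrt_ab]
    # Recover per-step alphas from ratios of consecutive alpha_bar values.
    alphas = [alpha_bar[0]] + [alpha_bar[t] / alpha_bar[t - 1] for t in range(1, len(alpha_bar))]
    return [1.0 - a for a in alphas]


def snr(betas: List[float]) -> List[float]:
    """Signal-to-noise ratio alpha_bar / (1 - alpha_bar) at each step."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running / (1.0 - running) if running < 1.0 else math.inf)
    return out


if __name__ == "__main__":
    base = linear_betas(1000)
    fixed = rescale_zero_terminal_snr(base)
    print(f"final-step SNR before: {snr(base)[-1]:.2e}")   # small but non-zero
    print(f"final-step SNR after:  {snr(fixed)[-1]:.2e}")  # 0.00e+00
```

The motivation given by Lin et al. is that sampling starts from pure noise. With the unadjusted schedule, however, the model never trains on a step that is pure noise, and forcing the last step to zero SNR removes that mismatch.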