OpenAI DevDay AI developer conference — What’s new?


OpenAI DevDay was held on November 6th, 2023, in San Francisco. This first-ever developer conference saw a host of new tools and capabilities announced for the firm's world-famous AI chatbot, ChatGPT, and its developer platform. The in-person event also featured ‘breakout sessions’ led by “members of OpenAI’s technical staff” for artificial intelligence developers looking for inspiration and immediate feedback. OpenAI CEO Sam Altman packed a lot into this one-day event, most notably OpenAI GPTs (custom versions of ChatGPT) and the new GPT-4 Turbo model. With that and plenty more in store, here’s everything announced at OpenAI DevDay!

What’s new? — All announcements from OpenAI DevDay

World-leading artificial intelligence firm OpenAI held its first developer conference in San Francisco this week. A lot was covered in the one-day event, so let’s quickly break down every new announcement.


As a brief overview, we’ve got a new flagship AI model, GPT-4 Turbo, alongside new text-to-speech (TTS) capabilities in the API. There’s also a GPT builder for creating custom AI models based on OpenAI foundation models, along with a soon-to-launch store (the GPT Store) for AI agent commerce. Text generation models now support “JSON mode and Reproducible outputs”. Developers will also benefit from a new Assistants API, which allows you to build “agent-like experiences on top of” OpenAI text-generation models.
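“Reproducible outputs” works through a `seed` request parameter (in beta at launch) plus a `system_fingerprint` field returned with each response. The sketch below is illustrative only: it assumes the Chat Completions request shape used by OpenAI's Python SDK (v1) and the `gpt-4-1106-preview` model name, and it simply builds the request and compares fingerprints, so no network call is made. The function names are hypothetical.

```python
from typing import Optional


def build_reproducible_request(prompt: str, seed: int = 12345,
                               model: str = "gpt-4-1106-preview") -> dict:
    """Build Chat Completions kwargs that request (mostly) deterministic sampling."""
    return {
        "model": model,
        # Same seed + same parameters should give consistent completions most of the time.
        "seed": seed,
        "messages": [{"role": "user", "content": prompt}],
    }


def expect_same_output(fingerprint_a: Optional[str], fingerprint_b: Optional[str]) -> bool:
    """Treat two seeded responses as comparable only if they report the same backend config."""
    return fingerprint_a is not None and fingerprint_a == fingerprint_b
```

With the official SDK, the dictionary could be passed as `client.chat.completions.create(**request)`, and each response's `system_fingerprint` fed to the comparison helper; a changed fingerprint signals that the backend changed and outputs may differ even with the same seed.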

“Thank you for joining us on OpenAI DevDay, OpenAI's first developer conference. Recordings of the keynote and breakout sessions will be available one week after the event.” (OpenAI)

Update: Recordings of the keynote and breakout sessions are now available.

GPT-4 Turbo with Vision

The latest AI model from OpenAI is GPT-4 Turbo. Before it, OpenAI's vision-capable model was GPT-4V, also known as GPT-4 with vision; in the API, GPT-4 Turbo with vision is offered as gpt-4-vision-preview. GPT-4V didn’t change the textual training data of the model, but added a new set of text-image pairs to enable computer vision, i.e., image input. Computer vision allows a computer to process and understand images, e.g., analyzing and classifying objects in the image. DALL·E 3 was also recently integrated for image output, but these are separate technologies.

GPT-4 Turbo has several benefits over GPT-4, including:

- One-third the cost of input

- One-half the cost of output

- 128k token context (Four times that of the best GPT-4 variant)

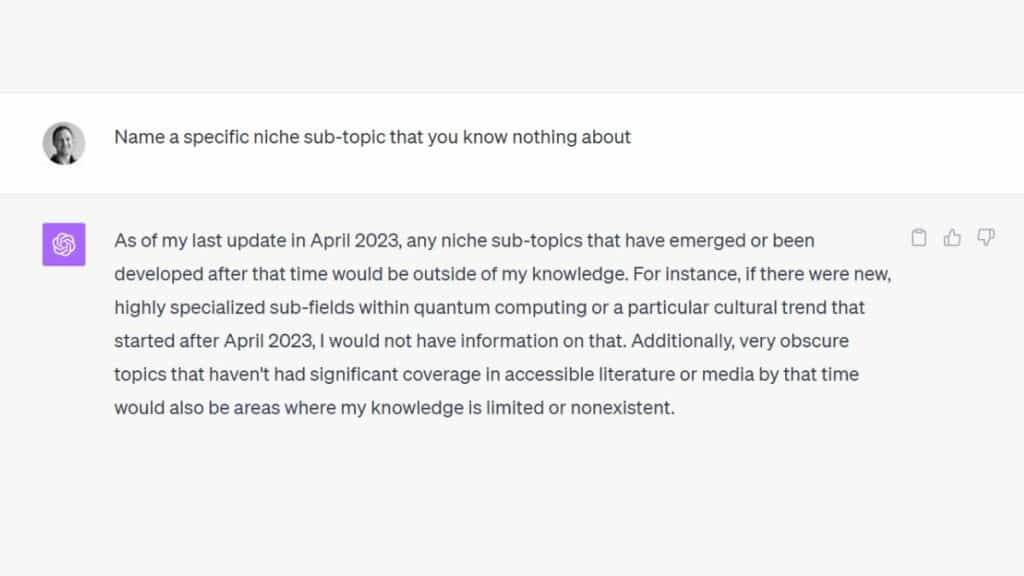

- Fresher training data (Includes knowledge up to April 2023).
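To make the price ratios concrete, here is a small cost calculator. The per-1,000-token prices are assumptions (the list prices quoted at launch in November 2023, which match the one-third and one-half ratios above); check OpenAI's current pricing before relying on them. The dictionary keys are simple labels, not necessarily API model IDs.

```python
from decimal import Decimal

# Assumed list prices in USD per 1,000 tokens at launch (November 2023); check current pricing.
PRICES_PER_1K = {
    "gpt-4": {"input": Decimal("0.03"), "output": Decimal("0.06")},
    "gpt-4-turbo": {"input": Decimal("0.01"), "output": Decimal("0.03")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one request: per-1K price times tokens / 1000, summed over input and output."""
    p = PRICES_PER_1K[model]
    return (p["input"] * input_tokens + p["output"] * output_tokens) / 1000


# A 10,000-token prompt with a 1,000-token reply:
print(request_cost("gpt-4", 10_000, 1_000))        # 0.36
print(request_cost("gpt-4-turbo", 10_000, 1_000))  # 0.13
```

Decimal is used instead of float so that cent-level amounts add up exactly.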

“It also supports our new JSON mode, which ensures the model will respond with valid JSON. The new API parameter response_format enables the model to constrain its output to generate a syntactically correct JSON object. JSON mode is useful for developers generating JSON in the Chat Completions API outside of function calling.” (OpenAI DevDay announcement)
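A minimal sketch of enabling JSON mode, assuming the Chat Completions request shape from OpenAI's Python SDK (v1) and the `gpt-4-1106-preview` model name. The helper names are hypothetical, and the block only builds the request and parses a reply string, so it runs without an API key. Note that JSON mode expects the word "JSON" to appear somewhere in the messages, and it guarantees valid syntax, not that the object matches your own schema.

```python
import json


def build_json_mode_request(user_prompt: str, model: str = "gpt-4-1106-preview") -> dict:
    """Build Chat Completions kwargs with JSON mode switched on."""
    return {
        "model": model,
        # Constrains the model to emit a syntactically valid JSON object.
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the messages to mention JSON explicitly.
            {"role": "system", "content": "You are a helpful assistant. Reply only with a JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_json_reply(content: str) -> dict:
    """Parse the assistant message; still check the shape, since JSON mode does not enforce a schema."""
    data = json.loads(content)  # raises json.JSONDecodeError (a ValueError) on bad syntax
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data
```

In practice the request would be sent with `client.chat.completions.create(**request)` and `response.choices[0].message.content` passed to `parse_json_reply`.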

This new model is not yet available via the ChatGPT interface, but OpenAI plans to “release the stable production-ready model in the coming weeks.”


OpenAI GPTs

Also announced at the OpenAI developer conference, GPTs will be customised versions of the OpenAI foundation models. Equipped with your own instructions and data, GPTs will be able to answer questions and solve problems in the context of anything you can imagine. As with the rest of the OpenAI developer platform, data you input is not used to train OpenAI models.

Outside of this new product name, GPT stands for Generative Pre-trained Transformer.

DALL·E 3 API

DALL·E 3 will come integrated into GPT-4 Turbo as standard, and the image generation model can now be used independently by third-party apps via the API. This is the model behind the recent viral “Disney Pixar AI movie poster” trend.
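As a sketch of what a third-party app might send to the Images API, the helper below validates parameters before a request is made. It assumes the launch-time DALL·E 3 options (model `dall-e-3`, one image per request, sizes 1024x1024, 1792x1024 or 1024x1792, `standard`/`hd` quality, `vivid`/`natural` style); the function name is hypothetical and no network call is made.

```python
# Assumed DALL·E 3 options at launch; check OpenAI's Images API reference for current values.
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}
DALLE3_STYLES = {"vivid", "natural"}


def build_dalle3_request(prompt: str, size: str = "1024x1024",
                         quality: str = "standard", style: str = "vivid") -> dict:
    """Validate options locally and return kwargs for an image-generation call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size {size!r}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"unsupported quality {quality!r}")
    if style not in DALLE3_STYLES:
        raise ValueError(f"unsupported style {style!r}")
    # DALL·E 3 generates a single image per request, so n is fixed at 1.
    return {"model": "dall-e-3", "prompt": prompt, "n": 1,
            "size": size, "quality": quality, "style": style}
```

With OpenAI's Python SDK (v1), the result could be passed as `client.images.generate(**request)`; failing fast on bad options avoids a wasted API round trip.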

Assistants API, Code Interpreter, and Information Retrieval algorithms (IR)

The new Assistants API will make it “easier for developers to build their own assistive AI apps that have goals and can call models and tools”.

- Code Interpreter: Can write and run Python code in a “sandboxed execution environment”, as well as output graphs and charts, and even process files with diverse data and formatting. Assistants (or AI agents) are able to “run code iteratively to solve challenging code and math problems”.

- Retrieval: Add new data that doesn’t exist in a given OpenAI model's training data, such as “proprietary domain data, product information or documents provided by your users”. This means you don't need to compute and store embeddings for your documents, or implement chunking and search algorithms. The Assistants API handles the retrieval process using methodologies already employed in ChatGPT.

- Function calling: Gives AI assistants the ability to “invoke functions you define and incorporate the function response in their messages”.
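The three tools above can be sketched as an assistant configuration plus a local dispatcher for function calls. This assumes the launch-time (beta) Assistants API tool types `code_interpreter`, `retrieval` and `function`, and that a run needing your function reports tool calls carrying an `id`, a function `name` and JSON-encoded `arguments`. The `get_order_status` function, its fake data and the assistant name are hypothetical, and the tool calls are plain dicts mirroring that shape, so no API call is made.

```python
import json
from typing import Any, Callable, Dict, List


def get_order_status(order_id: str) -> Dict[str, str]:
    """Hypothetical business function the assistant is allowed to call."""
    fake_orders = {"A100": "shipped", "B200": "processing"}  # stand-in for a real database
    return {"order_id": order_id, "status": fake_orders.get(order_id, "unknown")}


# Maps function names the model may request to local Python callables.
LOCAL_FUNCTIONS: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}

# Keyword arguments for creating an assistant with all three tool types.
ASSISTANT_CONFIG = {
    "name": "Order helper",  # hypothetical
    "instructions": "Answer customer questions. Use tools when they help.",
    "model": "gpt-4-1106-preview",
    "tools": [
        {"type": "code_interpreter"},  # sandboxed Python execution
        {"type": "retrieval"},         # OpenAI-managed search over uploaded files
        {
            "type": "function",
            "function": {
                "name": "get_order_status",
                "description": "Look up the shipping status of an order.",
                "parameters": {
                    "type": "object",
                    "properties": {"order_id": {"type": "string"}},
                    "required": ["order_id"],
                },
            },
        },
    ],
}


def run_tool_calls(tool_calls: List[dict]) -> List[Dict[str, str]]:
    """Execute requested function calls locally and build the outputs to send back."""
    outputs = []
    for call in tool_calls:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        fn = LOCAL_FUNCTIONS.get(name)
        result = fn(**args) if fn else {"error": f"unknown function {name}"}
        outputs.append({"tool_call_id": call["id"], "output": json.dumps(result)})
    return outputs
```

With OpenAI's Python SDK (v1), the configuration could be passed to `client.beta.assistants.create(**ASSISTANT_CONFIG)`, and when a run's status is `requires_action`, the dispatcher's result sent back with `client.beta.threads.runs.submit_tool_outputs(thread_id=..., run_id=..., tool_outputs=outputs)`.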

Copyright Shield — Copyright infringement protection by OpenAI

Continuing an effort only bolstered by the recent AI Safety Summit at Bletchley Park, UK, OpenAI this week introduced a new pro-customer safeguard for its artificial intelligence systems: Copyright Shield. In a strategic move to encourage confidence in AI content generation, OpenAI promises to “defend our customers, and pay the costs incurred, if you face legal claims around copyright infringement. This applies to generally available features of ChatGPT Enterprise and our developer platform.”

Whisper v3 AI model and Consistency Decoder to be open-sourced

OpenAI is releasing Whisper large-v3, the firm's most advanced open-source automatic speech recognition (ASR) model, with improved multilingual performance. This model is not yet available via the API, but Whisper v3 will likely be added to it by the end of 2023.

Consistency Decoder, an existing piece of software, is also being open-sourced. It can serve as a drop-in replacement for the Stable Diffusion VAE decoder. According to OpenAI, this decoder improves all images compatible with the Stable Diffusion 1.0+ VAE, with significant improvements in text, faces and straight lines.