The AI Safety Summit, hosted at Bletchley Park in Buckinghamshire, England, kicks off on Wednesday, November 1st and spans two days. As the name suggests, AI safety is at the core of the event, with discussions focusing on the risks of AI and the need for vigilance over its future in industry, private, and public spheres. Prime Minister Rishi Sunak addresses Britain just two days after the AI Executive Order from US President Joe Biden ushered in similar changes overseas. But what will be covered and who’s attending? Here’s what you need to know.

As the UK is hosting this event – likely to help position itself at the forefront of discussions around the topic – it’s worth noting the venue: Bletchley Park is a 19th-century house and estate, famously ‘the home’ of British codebreakers during World War II. According to publications from the UK government, the Summit at Bletchley Park will cover broad AI topics while also considering ‘Frontier AI’. That is “highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models.”

Is there an AI Safety Summit livestream?

No, there is no live stream for the AI Safety Summit. This is likely due to national security and/or logistical reasons. Accredited media organizations may have limited access, while official recordings may be released during or after the event by the UK government’s press departments.

AI Safety Summit attendees – Who’s there?

Governments

The UK government website notes the attendees as a mix of “international governments, leading AI companies, civil society groups, and experts”. Essentially, everyone you’d expect to be at an event of such potential importance. The UK has confirmed the attendance of 27 governments from around the world. The list includes Five Eyes allies Australia, Canada, New Zealand, and the USA, as well as governments from Europe, Asia, the Middle East, South America (Brazil), Central (Rwanda) and Eastern Africa (Kenya). China is in attendance, although the UK has made comments on that, and Ukraine, but not Russia, is on the published list of attendees. Other notable officials in attendance include:

- European Commission President Ursula von der Leyen

- United Nations Secretary-General Antonio Guterres

- Chinese Vice Minister of Science and Technology Wu Zhaohui

- Italian Prime Minister Giorgia Meloni

- German Chancellor Olaf Scholz

Institutes

The AI Safety Summit will also see 46 ‘academia and civil society’ institutions, including UK universities like Birmingham and Oxford (not Cambridge), Stanford University, and the Berkman Center for Internet & Society at Harvard University (but not MIT). Also in attendance will be representatives from the Chinese Academy of Sciences, the Ada Lovelace Institute, the Alan Turing Institute, and the Mozilla Foundation.

Individuals

Perhaps the most high-profile individual in attendance is Elon Musk. The CEO of SpaceX and Tesla has been vocal about his thoughts on the potential dangers of AI and is due to meet the UK Prime Minister Rishi Sunak. After the event, Mr Musk and Prime Minister Sunak will be “in conversation” on Thursday night (UK time) on X, formerly known as Twitter.

Also representing their corporations are Google DeepMind CEO Demis Hassabis, as well as Yann LeCun, Chief AI Scientist at Meta, and Sam Altman, CEO of OpenAI.

World-leading expert and vocal critic of AI, Geoffrey Hinton, will also be in attendance. Dr Hinton is considered one of the three “Godfathers of AI”, alongside Yoshua Bengio and Yann LeCun, the latter of whom worked under him as a postdoctoral researcher.

What’s on the agenda of the AI Safety Summit?

As mentioned above, the agenda covers general AI and ‘Frontier AI’, split across the two days. The first day of the summit is a series of discussions and roundtables for the attendees, mainly focusing on risk. Topics listed by the official program include:

Understanding Frontier AI Risks (roundtable discussions)

- Risks to Global Safety from Frontier AI Misuse. Focusing on safety risks including biosecurity and cybersecurity.

- Risks from Unpredictable Advances in Frontier AI Capability. Focusing on ‘leaps’ around scaling, forecasting, and development implications like open source.

- Risks from Loss of Control over Frontier AI. Focusing on control and oversight, monitoring, and prevention of loss of control of advanced AI.

- Risks from the Integration of Frontier AI into Society. Focusing on election disruption, crime, online safety, “exacerbating global inequalities”, and steps to mitigate them.

The second day of the Summit will focus on group discussions among attendees about steps to address these risks and ensure AI is used as a “force for good”, with a potential agreement between international counterparts on next steps.

The results — Opinions, quotes, and proposed legislation

Michelle Donelan, UK Science and Tech Secretary, addressed attendees on November 1st.

We can set up processes, to really delve into what the risks actually are, so that we can then put up the necessary guardrails, whilst embracing the technology.

Michelle Donelan, BBC News

Elon Musk, who co-founded OpenAI along with Sam Altman in 2015, was also in attendance. The Tesla and SpaceX executive has since gone on to found his own rival artificial intelligence firm, xAI. Despite being well positioned to make billions from this global technological surge, the Tesla chief warned the summit that AI poses “one of the biggest threats to humanity”.

I mean, for the first time, we have a situation where there’s something that is going to be far smarter than the smartest human. So, you know, we’re not stronger or faster than other creatures, but we are more intelligent. And here we are, for the first time really in human history, with something that’s going to be far more intelligent than us.

Elon Musk, AI Safety Summit

Similarly, Demis Hassabis, co-founder and CEO of Google DeepMind, doesn’t believe we should “move fast and break things”. Referencing the de facto Silicon Valley mantra in discussion with BBC News during its coverage of the event, he acknowledged that the philosophy has been “extraordinarily successful to build massive companies”, but argued that AI is simply “too important a technology” to develop that way.

AI is too important a technology, I would say, too transformative a technology to do it in that way.

Demis Hassabis, referencing a “Move fast and break things” approach to AI development.

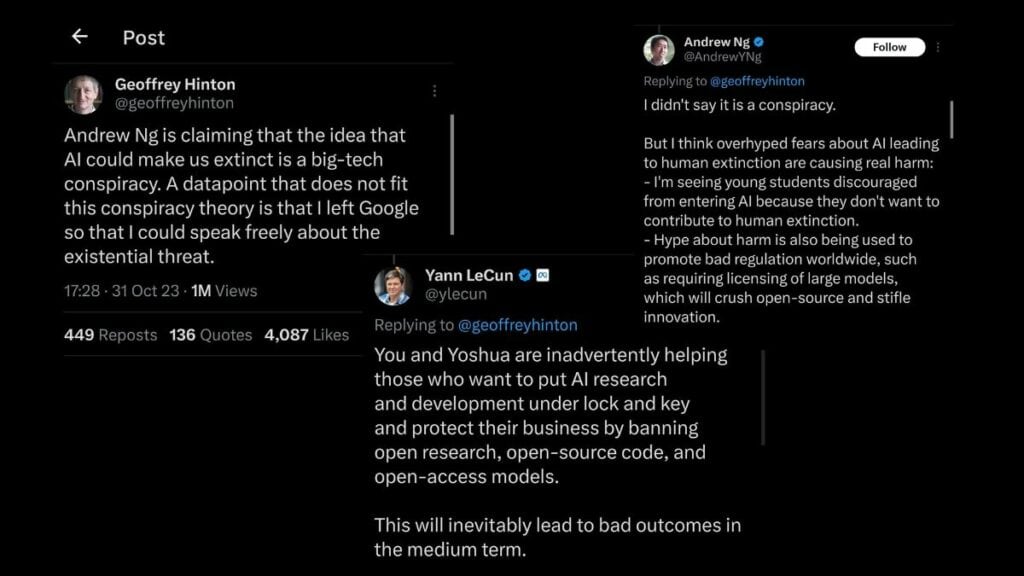

Geoffrey Hinton, one of the world’s foremost specialists in machine learning, left Google earlier this year citing a need to openly discuss his concerns. In response to comments from his peers Andrew Ng and Yann LeCun, Hinton suspects an ulterior motive behind the seemingly altruistic compliance from big tech.

I suspect that Andrew Ng and Yann LeCun have missed the main reason why the big companies want regulations. Years ago the founder of a self-driving company told me that he liked safety regulations because if you satisfied them it reduced your legal liability for accidents.

Geoffrey Hinton, X (formerly Twitter)

Andrew Ng, co-founder of Google Brain and former Chief Scientist at Baidu, responded alongside Yann LeCun. Both disagreed with Hinton, arguing that open innovation is essential.

I didn’t say it is a conspiracy.

But I think overhyped fears about AI leading to human extinction are causing real harm:

- I’m seeing young students discouraged from entering AI because they don’t want to contribute to human extinction.

- Hype about harm is also being used to promote bad regulation worldwide, such as requiring licensing of large models, which will crush open-source and stifle innovation.

I know you’re sincere in your concerns about AI and human extinction. I just respectfully disagree with you on extinction risk, and also think these arguments — sincere though they be in your case — do more harm than good.

Andrew Ng, in response to Geoffrey Hinton

You and Yoshua [Bengio] are inadvertently helping those who want to put AI research and development under lock and key and protect their business by banning open research, open-source code, and open-access models.

This will inevitably lead to bad outcomes in the medium term.

Yann LeCun, in response to Geoffrey Hinton