“This is why AMD can’t compete” The Nvidia Way author explains why the AI race isn’t close


Nvidia has been leading the charge when it comes to AI, which has helped it become one of the largest companies in the world, alongside the likes of Apple, Microsoft, and Amazon. Of course, Nvidia continues to maintain the consumer graphics card side of its business, having released four cards in the RTX 50 series so far, the latest being the RTX 5070.

However, it’s AI that brings in the big bucks, and Team Green has been investing heavily in infrastructure to maintain its lead. It doesn’t come as a surprise to see 200,000 Nvidia H100 GPUs powering Grok 3. Now, we can get further insight into Nvidia’s secrets to success after an interview with The Nvidia Way author Tae Kim, who has been in close contact with the company.

Nvidia is well ahead of the competition thanks to its AI servers

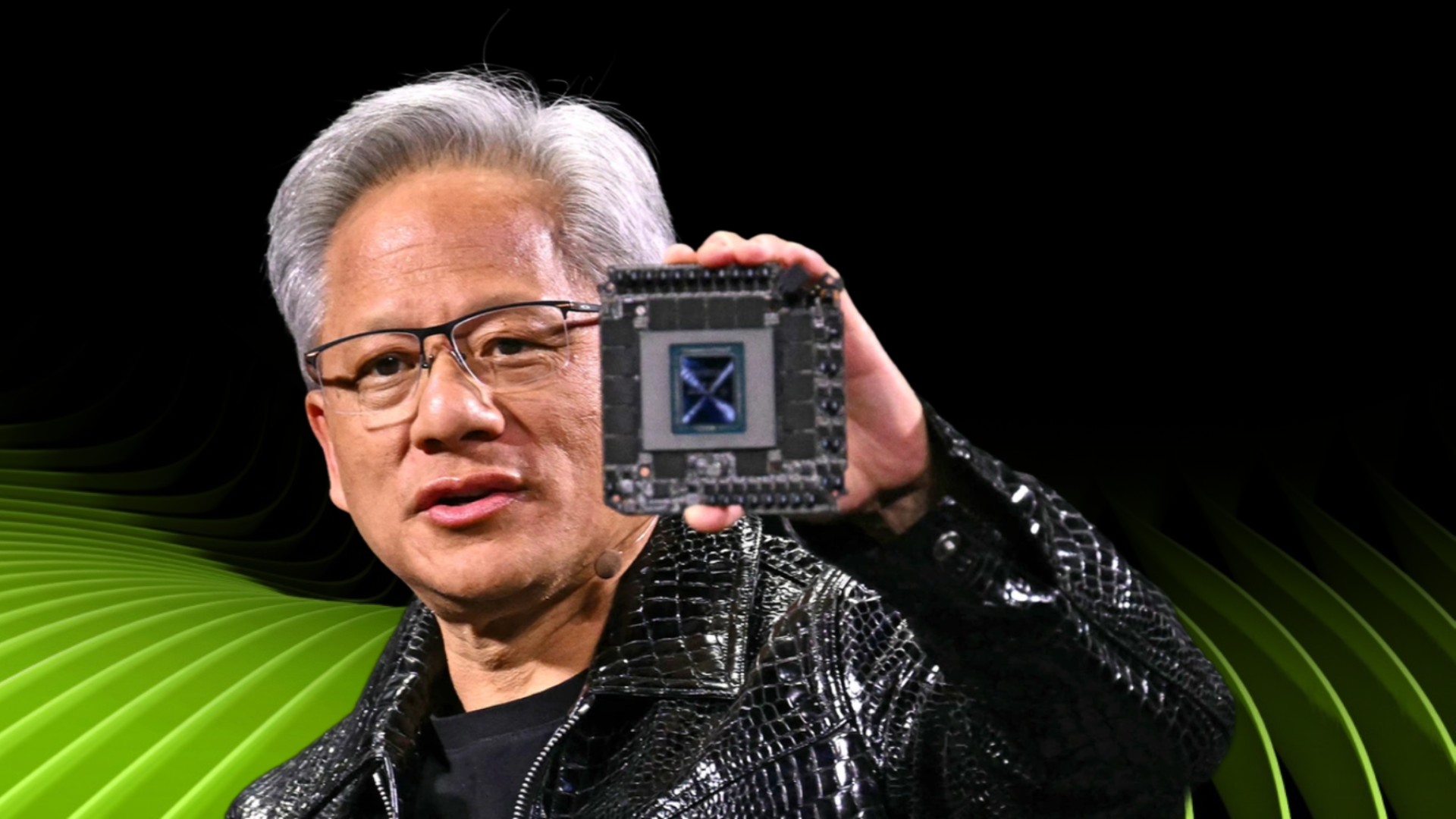

Large companies have been relying on Nvidia GPUs to train their AI models. Nvidia has even teamed up with DeepSeek, with CEO Jensen Huang recently praising the startup for its “world-class” open-source reasoning model. So, it’s safe to say that Nvidia is ahead of the competition right now. Kim says this is because Nvidia has the hardware and interconnectivity that its competitors don’t.

“In the past, even just a year ago, a typical AI server would have eight GPUs, it was a Hopper AI server. Now the current Blackwell AI server that’s shipping right now is 72 GPUs in the same space, it’s like one rack, it weighs one and a half tons. This is why AMD can’t compete, they don’t have a 72-GPU AI server that has all the interconnects, all the networking, everything optimized.”

Tae Kim, author of The Nvidia Way: Jensen Huang and the Making of a Tech Giant

“You could stack a row of these [GPU racks] together and build out a 100,000 GPU cluster,” says Kim; this is what powered earlier versions of the Grok chatbot. Nvidia invested in uses for GPUs beyond gaming, including AI, earlier than its peers, and it has paid off in a big way. Kim continues, “the competitors don’t have CUDA, they don’t have all the optimized libraries and the bugs ironed out after 10 years of battle testing”. Additionally, Nvidia’s acquisition of Mellanox, announced in 2019 and completed in 2020, was a massive boost to its networking division.

AMD has been slowly catching up on the AI front. On the gaming side, its new FSR 4 upscaler is now “much closer in quality” to DLSS 4, Nvidia’s latest version, with Team Red now making use of a neural network much like Nvidia. As for AI servers, though, Nvidia is still well in the lead with its Blackwell architecture. AMD’s closest alternative is its Instinct line of accelerators, which sit alongside its EPYC server processors.

If you want to learn more about The Nvidia Way, you can check out the book (or audiobook) on Amazon. Kim also talked a bunch about Nvidia CEO Jensen Huang, whom he calls “blunt and direct” – in an incredibly good way.