What GPU has the most CUDA cores?

CUDA (Compute Unified Device Architecture) cores are the general-purpose processing units inside Nvidia graphics processing units (GPUs). They are built for work that can be split into many independent pieces and processed in parallel, such as mathematical calculations and scientific simulations.

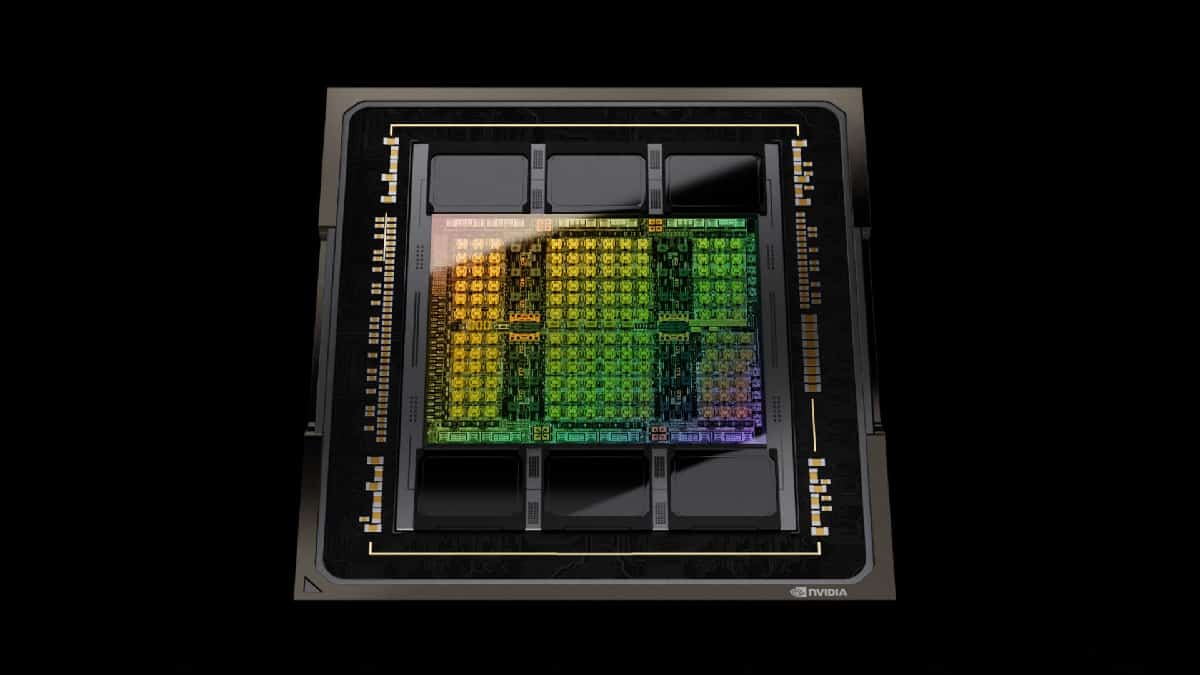

Among GeForce cards built on Nvidia’s Ada Lovelace architecture, the RTX 4090 has the most CUDA cores, at 16,384. It is the flagship of that generation. Ada Lovelace brings a number of improvements over the previous Ampere architecture, including a new streaming multiprocessor (SM) design and enhanced ray tracing and Tensor Core performance.
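The 16,384 figure can be checked with simple arithmetic: a GPU's CUDA core count is the number of streaming multiprocessors multiplied by the cores in each one. The sketch below assumes 128 enabled SMs on the RTX 4090 and 128 FP32 CUDA cores per Ada SM. Both values come from Nvidia's published specifications rather than from this article, and the function name is purely illustrative.

```python
def total_cuda_cores(sm_count: int, cores_per_sm: int) -> int:
    """Total CUDA cores = number of SMs x CUDA cores per SM."""
    if sm_count < 0 or cores_per_sm < 0:
        raise ValueError("counts must be non-negative")
    return sm_count * cores_per_sm


# Assumed values (not stated in the article):
RTX_4090_SMS = 128       # enabled streaming multiprocessors
ADA_CORES_PER_SM = 128   # FP32 CUDA cores per Ada SM

rtx_4090_cores = total_cuda_cores(RTX_4090_SMS, ADA_CORES_PER_SM)
print(f"RTX 4090 CUDA cores: {rtx_4090_cores:,}")  # RTX 4090 CUDA cores: 16,384
```

The same helper works for any other GPU once its SM count and per-SM core count are known.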

In addition to its CUDA cores, the RTX 4090 has 512 fourth-generation Tensor Cores, which accelerate deep learning and AI workloads, and 128 third-generation RT Cores, which accelerate real-time ray tracing. This combination makes it well-suited for high-end gaming and for professional applications such as 3D rendering and animation.

The RTX 4090 has a base clock of 2,235 MHz and a boost clock of 2,520 MHz. It also has 24 GB of GDDR6X memory on a 384-bit bus, which provides roughly 1 TB/s of memory bandwidth for demanding workloads.
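To put a number on "high bandwidth", peak memory bandwidth can be estimated as bus width times per-pin data rate, divided by 8 to convert bits to bytes. The sketch below is a rough calculation, not a benchmark. The 384-bit bus width and 21 Gbit/s per-pin data rate are taken from Nvidia's published specifications and should be treated as assumptions, and the function name is illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed RTX 4090 memory configuration (not stated in the article):
BUS_WIDTH_BITS = 384     # memory interface width
DATA_RATE_GBPS = 21.0    # GDDR6X data rate per pin

peak_bandwidth = memory_bandwidth_gb_s(BUS_WIDTH_BITS, DATA_RATE_GBPS)
print(f"Peak memory bandwidth: {peak_bandwidth:.0f} GB/s")  # Peak memory bandwidth: 1008 GB/s
```

This is a theoretical ceiling; real workloads usually achieve somewhat less.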

Although the RTX 4090 is sold as a consumer GeForce card, its high performance and large CUDA core count also make it well-suited to professional workloads such as scientific simulations, machine learning, and video editing. It is equally a highly capable gaming GPU that can deliver high frame rates and ray-traced graphics in the most demanding games on the market.

What are CUDA cores?

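Each CUDA core is a small arithmetic unit that executes one thread's instruction at a time, typically a single floating-point or integer operation per clock cycle. A GPU's parallel throughput comes from running thousands of these cores at once, so, all else being equal, the more CUDA cores a GPU has, the more computations it can perform simultaneously. Core counts are most meaningful within one architecture, because clock speeds and per-core design differ between generations. This parallelism makes CUDA cores well-suited for tasks that require high computational power, such as machine learning, artificial intelligence, and scientific computing.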

CUDA cores are programmed through CUDA C/C++, an extension of C and C++ that adds keywords and syntax for launching functions (called kernels) across many GPU threads. The CUDA Toolkit also includes a compiler (nvcc), libraries such as cuBLAS and cuFFT, and profiling and debugging tools that make it easier for developers to write and optimize programs for CUDA-enabled GPUs.
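To give a feel for how CUDA programs are structured, here is a minimal sketch that emulates the thread-indexing pattern in plain Python. It is not CUDA code and does not run on a GPU: the `launch` helper and `vector_add_kernel` function are hypothetical names, and the loops run sequentially where a real GPU would run the threads in parallel. What it does mirror is the usual convention that each thread computes a global index from its block index, block size, and thread index, then handles one element.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by one emulated thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):                        # guard: the last block may overshoot
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequentially emulate a 1-D kernel launch (a GPU runs these in parallel)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = list(range(n))
b = [10 * x for x in range(n)]
out = [0] * n

block_dim = 4                                 # threads per block
grid_dim = (n + block_dim - 1) // block_dim   # enough blocks to cover n elements

launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

Three blocks of four threads give twelve emulated threads for ten elements, which is why the bounds check inside the kernel is needed.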