ChatGPT 4 Turbo and GPT-4 Turbo explained

GPT-4 Turbo was announced at OpenAI’s DevDay, its first-ever developer conference, and it’s already shaking things up. Currently available as a preview, this upgraded AI model costs developers a third as much as GPT-4 for input tokens and half as much for output tokens, despite a longer context window and more recent training data. There doesn’t appear to be any downside, and it launched alongside new API access to text-to-speech and DALL·E 3 image generation. Could this be the world’s best AI chatbot model ever? Let’s see what’s new in GPT-4 Turbo and ChatGPT 4 Turbo.

What is GPT-4 Turbo?

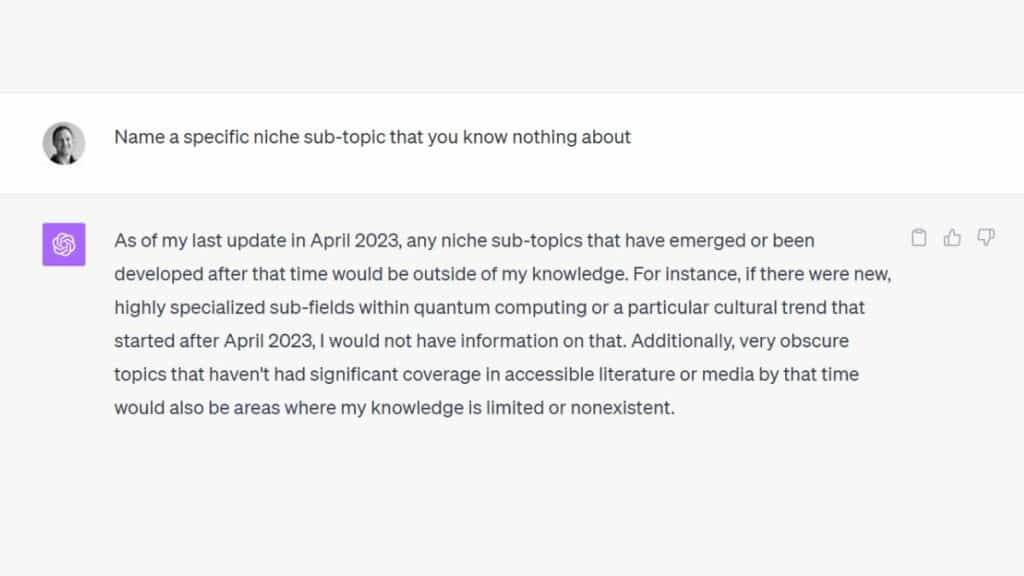

One of the key differences between GPT-4 (or GPT-4V) and GPT-4 Turbo is that the new model has had a major training data refresh: it was trained on information as recent as April 2023, compared with September 2021 for the original GPT-4.

OpenAI CEO Sam Altman shared via X on Sunday, November 12th, that a “new version of GPT-4 Turbo” is “now live in ChatGPT”. This update is a small iteration that doesn’t warrant a whole new model name (certainly not just days after the release of GPT-4 Turbo). However, Altman hopes you’ll still find it “a lot better” than the previous version.

GPT-4 Turbo is more capable and has “knowledge of world events up to April 2023”. Boasting a 128k context window (128,000 tokens), it can “fit the equivalent of more than 300 pages of text in a single prompt”. In practice, this means you can supply an entire book in plain text and ask questions that draw on any part of it.
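To get a feel for what 128,000 tokens means, the sketch below estimates whether a plain-text document fits in a single prompt. It is only a rough heuristic, assuming OpenAI’s rule of thumb that one token is about four characters (or three-quarters of a word) of English text. The function names, the 4,096 tokens reserved for the reply and the 300-words-per-page figure are illustrative assumptions; OpenAI’s tiktoken library gives exact token counts.

```python
import math

CONTEXT_WINDOW_TOKENS = 128_000  # GPT-4 Turbo context window (prompt + reply)
CHARS_PER_TOKEN = 4              # rule of thumb for English text, not exact
WORDS_PER_TOKEN = 0.75           # rule of thumb: ~750 words per 1,000 tokens


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of English text from its length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_096) -> bool:
    """Check whether the text, plus room for the reply, fits in the window.

    reserved_for_output is an assumption: tokens set aside for the answer.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def approx_pages(tokens: int, words_per_page: int = 300) -> float:
    """Convert a token budget into pages, using a hypothetical words-per-page figure."""
    return tokens * WORDS_PER_TOKEN / words_per_page


if __name__ == "__main__":
    print(approx_pages(CONTEXT_WINDOW_TOKENS))  # 320.0
    book = "word " * 60_000  # 300,000 characters of placeholder text
    print(estimate_tokens(book), fits_in_context(book))  # 75000 True
```

At an assumed 300 words per page, 128,000 tokens works out to about 320 pages, which lines up with OpenAI’s “more than 300 pages” figure.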

Computer vision in GPT-4V and GPT-4 Turbo allows for “generating captions, analyzing real world images in detail, and reading documents with figures”. As an example, the Danish mobile app Be My Eyes connects blind and low-vision people with sighted volunteers, who help guide them through daily tasks via their smartphone camera. Be My Eyes uses this technology to help people who are blind or have low vision with daily tasks like identifying a product or navigating a store. Developers can “access this feature by using gpt-4-vision-preview in the API”.
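For developers, image input goes through the same Chat Completions endpoint as text. The sketch below only builds a request payload in the message format OpenAI documents for gpt-4-vision-preview, where a user message’s content is a list mixing text parts and image_url parts. The helper name, the example URL and the max_tokens value are illustrative assumptions, and actually sending the request requires the official openai Python package and an API key.

```python
import json


def build_vision_request(prompt: str, image_url: str, detail: str = "auto",
                         max_tokens: int = 300) -> dict:
    """Build a hypothetical Chat Completions payload pairing text with one image."""
    return {
        "model": "gpt-4-vision-preview",
        "max_tokens": max_tokens,  # illustrative cap on the length of the reply
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url",
                     "image_url": {"url": image_url, "detail": detail}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    payload = build_vision_request(
        "What product is on this shelf?",
        "https://example.com/shelf.jpg",  # placeholder URL
    )
    print(json.dumps(payload, indent=2))
    # With the openai package (v1.x) installed and OPENAI_API_KEY set:
    #   from openai import OpenAI
    #   reply = OpenAI().chat.completions.create(**payload)
    #   print(reply.choices[0].message.content)
```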

It seems as though OpenAI plans to release upgraded models in between each major foundation model release. This staggered pattern of GPT-X, then GPT-X Turbo, followed by GPT-(X+1) and GPT-(X+1) Turbo, is not dissimilar from the graphics card industry, where NVIDIA releases an RTX-XXXX card, then an RTX-XXXX Ti at the halfway point. In both cases the underlying architecture stays the same, while some attributes are made ‘bigger and better’ (like the amount of training data or the amount of RAM, respectively) to stay competitive until the next big thing is ready.

What is ChatGPT 4 Turbo?

There is no official release named ChatGPT 4 Turbo. This is an unofficial term that refers to ChatGPT with the GPT-4 Turbo model enabled.

Programming in JSON Mode with GPT-4 Turbo

GPT-4 Turbo requires less guidance and follows instructions to generate specific formats (e.g., “always respond in XML”) more reliably. At the DevDay developer conference, OpenAI also introduced “JSON mode” to its API, which ensures the model will respond with valid JSON. The new API parameter response_format “enables the model to constrain its output to generate a syntactically correct JSON object. JSON mode is useful for developers generating JSON in the Chat Completions API outside of function calling.”
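Here is a minimal sketch of JSON mode, assuming the official openai Python package (v1.x) and the gpt-4-1106-preview model ID. JSON mode is switched on with response_format={"type": "json_object"}, and OpenAI asks that the conversation itself also tell the model to produce JSON. The helper names and prompts are hypothetical. Note that JSON mode guarantees valid syntax, not a particular schema, so the reply is still checked after parsing.

```python
import json


def build_json_mode_request(question: str) -> dict:
    """Build a hypothetical Chat Completions payload with JSON mode switched on."""
    return {
        "model": "gpt-4-1106-preview",
        "response_format": {"type": "json_object"},  # turns on JSON mode
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON as well
            {"role": "system",
             "content": "You are a helpful assistant. Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
    }


def parse_json_reply(reply_text: str) -> dict:
    """Parse the model's reply; JSON mode guarantees valid syntax, not a schema."""
    data = json.loads(reply_text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


def ask(question: str) -> dict:
    """Send the request; needs the openai package (v1.x) and OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so the helpers above run offline

    client = OpenAI()
    response = client.chat.completions.create(**build_json_mode_request(question))
    return parse_json_reply(response.choices[0].message.content)


if __name__ == "__main__":
    print(parse_json_reply('{"city": "San Francisco", "event": "DevDay"}'))
```

Keeping the payload builder separate from the network call makes the request easy to inspect and test without an API key.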

How to get GPT-4 Turbo

We released the first version of GPT-4 in March and made GPT-4 generally available to all developers in July. Today we're launching a preview of the next generation of this model, GPT-4 Turbo.

OpenAI, November 6th, 2023

Developers can access GPT-4 Turbo through the OpenAI API (often called the ChatGPT API). The API is also home to other models, some of which are only accessible via the API and not through the ChatGPT interface. The full list of models now stands at:

- GPT-4 Turbo
  - gpt-4-1106-preview
  - gpt-4-1106-vision-preview
- GPT-4
  - gpt-4
  - gpt-4-32k
- GPT-3.5 Turbo
  - gpt-3.5-turbo-1106
  - gpt-3.5-turbo-instruct
- DALL·E 3
- DALL·E 3 HD
- DALL·E 2
- Whisper
- TTS
- TTS HD
- DaVinci
  - davinci-002
- Babbage
  - babbage-002
- Ada
  - ada v2

As you can see, most foundation models come with incremental upgraded versions. These offshoots typically have unattractive, unmarketable names, but developers don’t care about that; what really matters is what the models can do.

How much does the GPT-4 Turbo API cost?

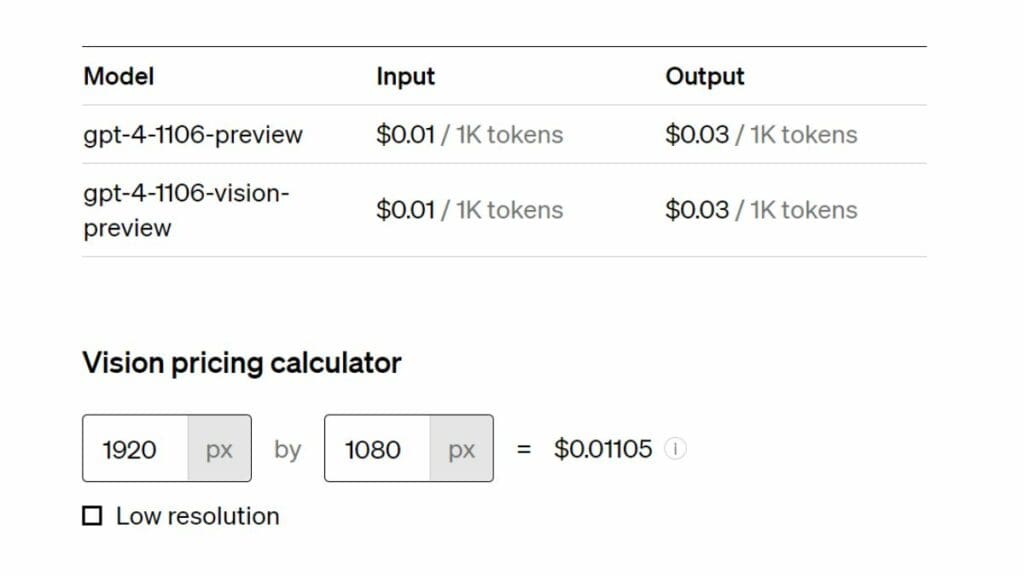

GPT-4 Turbo can “accept images as inputs in the Chat Completions API”, because it includes the same computer vision technology as the earlier GPT-4V model. However, because text and images are processed in fundamentally different ways, pricing depends on what kind of input you send.

Measured in tokens, GPT-4 Turbo costs $0.01 per 1K input tokens. Using OpenAI’s rule of thumb that 1,000 tokens is about 750 words of English text, that’s one cent for roughly 750 words. Output tokens are priced at $0.03/1K, which works out to roughly one cent per 250 words.
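The token arithmetic is easy to script. This sketch uses the GPT-4 Turbo prices quoted above and Python’s Decimal type to avoid floating-point rounding on currency; the function name is hypothetical. In a real application, the token counts would come from the API response’s usage field (prompt_tokens and completion_tokens).

```python
from decimal import Decimal

# GPT-4 Turbo prices quoted above, in US dollars per 1,000 tokens
INPUT_PRICE_PER_1K = Decimal("0.01")
OUTPUT_PRICE_PER_1K = Decimal("0.03")


def request_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Return the cost of one request given its input and output token counts."""
    return (input_tokens * INPUT_PRICE_PER_1K
            + output_tokens * OUTPUT_PRICE_PER_1K) / 1000


if __name__ == "__main__":
    # e.g. a 10,000-token prompt that produces a 1,000-token answer
    print(request_cost_usd(10_000, 1_000))  # 0.13
```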

In pixels, however, GPT-4 Turbo costs $0.01105 for a single 1080p image. That’s just over one cent for just over 2 million (2,073,600) pixels.

We plan to roll out vision support to the main GPT-4 Turbo model as part of its stable release. Pricing depends on the input image size. For instance, passing an image with 1080×1080 pixels to GPT-4 Turbo costs $0.00765.

OpenAI
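Both image prices can be reproduced from the token-counting rule in OpenAI’s vision guide at the time of writing, assuming high-detail mode: the image is scaled to fit within 2048×2048, then scaled so its shortest side is 768px, and each 512px tile costs 170 tokens on top of a fixed 85 tokens (low-detail mode is a flat 85 tokens). The sketch below approximates that rule under those assumptions and is not OpenAI’s billing code; in particular, it assumes images are only ever shrunk, never enlarged. Function names are hypothetical.

```python
import math
from decimal import Decimal
from fractions import Fraction

BASE_TOKENS = 85        # fixed cost added to every image
TOKENS_PER_TILE = 170   # cost of each 512x512 tile in high-detail mode
PRICE_PER_1K_TOKENS = Decimal("0.01")  # GPT-4 Turbo input price quoted above


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Approximate the token count of one image input."""
    if detail == "low":
        return BASE_TOKENS
    # Fractions keep the scaling exact, so tile counts are not skewed by rounding
    w, h = Fraction(width), Fraction(height)
    # Step 1: fit within a 2048x2048 square, preserving the aspect ratio
    if max(w, h) > 2048:
        scale = Fraction(2048) / max(w, h)
        w, h = w * scale, h * scale
    # Step 2: shrink so the shortest side is 768px (assumption: never enlarge)
    if min(w, h) > 768:
        scale = Fraction(768) / min(w, h)
        w, h = w * scale, h * scale
    # Step 3: count the 512px tiles needed to cover the scaled image
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles


def image_cost_usd(width: int, height: int, detail: str = "high") -> Decimal:
    """Convert an image's token count into US dollars."""
    return image_tokens(width, height, detail) * PRICE_PER_1K_TOKENS / 1000


if __name__ == "__main__":
    print(image_tokens(1920, 1080), image_cost_usd(1920, 1080))  # 1105 0.01105
    print(image_tokens(1080, 1080), image_cost_usd(1080, 1080))  # 765 0.00765
```

A 1920×1080 image shrinks to about 1365×768, which needs 3×2 = 6 tiles (85 + 6×170 = 1,105 tokens), while 1080×1080 becomes 768×768 and needs 2×2 = 4 tiles (765 tokens). At $0.01 per 1K tokens, these match the $0.01105 and $0.00765 figures above.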

Steve’s final thoughts

Of all the new features and upgrades we’ve seen from OpenAI DevDay, the new GPT-4 Turbo model will be the most significant to many. Even alongside the new DALL·E 3 API, the Assistants API, retrieval, TTS and speech recognition, the flagship model holds the spotlight. We also saw the Copyright Shield initiative, under which OpenAI will defend customers against legal claims relating to copyright infringement, as well as OpenAI’s new GPTs: custom versions of ChatGPT that you can build with your very own instructions and data!

With the new GPT-4 Turbo model charging $0.01 per thousand tokens for text input and $0.01105 for a 1080p image input, I guess it’s true what they say.

An image is worth a thousand words.