NVIDIA HGX H200 Tensor Core GPU – World’s most powerful AI superchip


NVIDIA is powering the generative AI race with its high-performance Grace Hopper superchips. Today’s multi-billion-parameter LLMs (large language models) process vast amounts of data, which calls for high-bandwidth memory hardware such as the NVIDIA GH200 Grace Hopper superchip. Now, the firm has announced the new NVIDIA HGX H200 Tensor Core GPU.

What is the NVIDIA HGX H200 Tensor Core GPU?

The new AI superchip is being touted as “The World's Most Powerful GPU”. That’s right, not just the most powerful for AI, but the most powerful, full stop!

NVIDIA’s HGX H200 will accelerate inference for the world’s most powerful LLMs, such as OpenAI’s GPT-4 Turbo (the model behind ChatGPT), Google’s PaLM 2, and Meta’s open-source LLaMA 2. Today’s AI applications, particularly large language models, are trained on massive amounts of data. After all, it’s in the name!

The new NVIDIA H200 Tensor Core GPU “supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities.” All of this cutting-edge tech requires an equally cutting-edge memory bandwidth platform to handle never-before-seen HPC applications. NVIDIA CEO Jensen Huang’s latest hardware aims to solve this problem. According to NVIDIA, “As the first GPU with HBM3e, the H200's larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.”

These performance leaps come down to some very impressive memory hardware. In fact, “the NVIDIA H200 is the first GPU to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s)”, which is “nearly double the capacity of the NVIDIA H100 Tensor Core GPU with 1.4X more memory bandwidth.” As a result, “the H200's larger and faster memory accelerates generative AI and LLMs, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.”
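To sanity-check the “nearly double” and “1.4X” figures quoted above, here is a minimal arithmetic sketch. The H200 numbers come from the quote. The H100 numbers (80 GB of memory and 3.35 TB/s of bandwidth, for the SXM variant) are not stated in this article and are assumed here for illustration.

```python
# H200 figures are taken from the NVIDIA quote in this article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# ASSUMPTION: H100 SXM figures, not stated in this article.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"Memory capacity:  {capacity_ratio:.2f}x the H100")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x the H100")
```

Under those assumed H100 figures, the capacity ratio works out to roughly 1.76x and the bandwidth ratio to roughly 1.43x, which is consistent with the quoted claims.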

The H200 is based on the same NVIDIA Hopper architecture we saw earlier this year in the announcement of NVIDIA Grace CPUs. NVIDIA’s earlier Hopper hardware, including the high-speed, high-bandwidth GH200 Grace Hopper superchip, was already the front-runner, making the firm its own biggest competitor. At this point, can anyone (or any firm) catch up?


The AI servers industry – Can anyone compete with NVIDIA’s deep learning data center?

Ian Buck, NVIDIA's Vice President of Hyperscale and HPC, is confident in the firm's position.

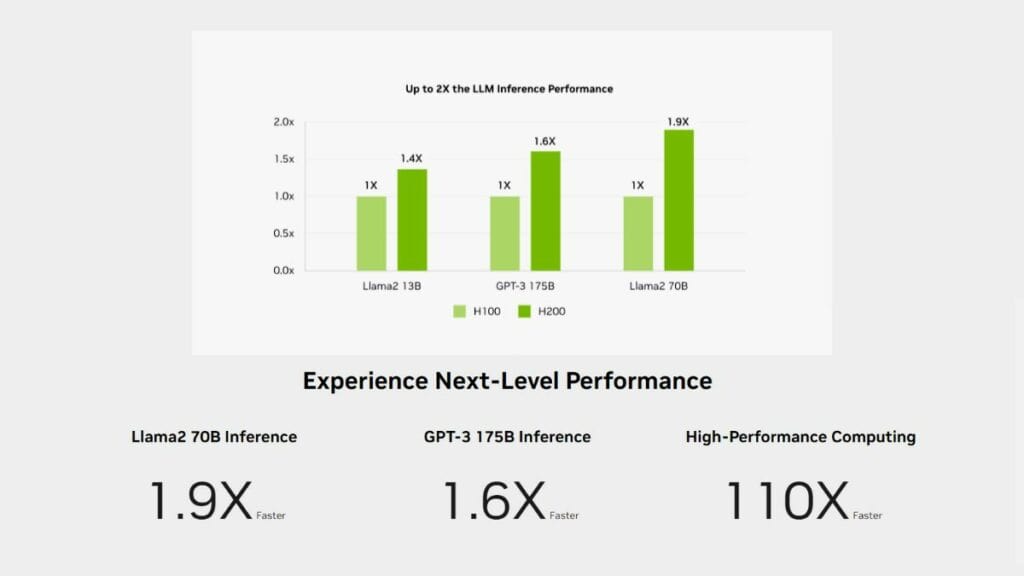

The H200 boosts inference speed by up to 2X compared to H100 GPUs when handling LLMs like Llama2.

NVIDIA

With all the world’s giant-scale HPC providers (Amazon Web Services (AWS), Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure) set to deploy the new H200, competing with NVIDIA (now a trillion-dollar company, thanks to artificial intelligence) seems unlikely.

NVIDIA in 2nd place . . . to NVIDIA

I recently called NVIDIA the ‘key hardware manufacturer of the AI industry’. Now that NVIDIA is the world’s newest trillion-dollar company, thanks to doubling down on artificial intelligence, that claim grows ever more true. When all the world’s tech giants are defaulting to the same hardware manufacturer, as Elon Musk did with the purchase of 10,000 GPUs to launch xAI, it’s clear you’re doing something right. While it’s not widely reported which GPU model they were, I’d assume they were GH200 chips, bought in a sweet deal direct from CEO Jen-Hsun “Jensen” Huang.

This all represents a steadfast hold on the industry, with NVIDIA’s network of server manufacturers set to deploy the new hardware over the course of 2025. That network includes ASUS, Dell Technologies, GIGABYTE, Hewlett Packard Enterprise, Lenovo, Supermicro and Wistron.

What’s the best GPU for AI?

The NVIDIA HGX H200 GPU is the new best hardware for AI and HPC applications. However, as most of us can’t afford the five-figure price tag, any modern NVIDIA GeForce RTX 40 series GPU will perform well enough for hobbyist or semi-professional LLM training purposes.