Microsoft AI chip ‘Maia AI accelerator’ announced at Ignite conference


The new Microsoft AI chip revealed at the Microsoft Ignite conference is part of the company’s plan to “tailor everything ‘from silicon to service’ to meet AI demand”. Microsoft data centers are set to receive NVIDIA’s latest HGX H200 Tensor Core GPUs alongside a new proprietary AI accelerator chip, and with that world-leading upgrade the Microsoft Azure cloud computing platform becomes the one to beat.

Microsoft AI chip announced at the Microsoft Ignite conference

In fact, two new chips emerged from their secretive development dens this week: “the Microsoft Azure Maia AI Accelerator, optimized for artificial intelligence (AI) tasks and generative AI, and the Microsoft Azure Cobalt CPU, an Arm-based processor tailored to run general purpose compute workloads on the Microsoft Cloud.”

These two custom-designed chips put the tech giant in a stronger position in the AI race against NVIDIA and AMD, each of which has its own AI hardware supply chain.

Microsoft Azure takes the lead

The new proprietary Maia AI accelerator chip, together with the NVIDIA HGX H200 servers coming to Microsoft data centers, will make the Azure cloud computing platform the most powerful of its kind anywhere in the world.

Scott Guthrie is the Executive Vice President of Microsoft’s Cloud & AI Group. Speaking at Microsoft Ignite on Wednesday, November 15, Guthrie said that “Microsoft is building the infrastructure to support AI innovation, and we are reimagining every aspect of our data centers to meet the needs of our customers.” He added that “at the scale we operate, it's important for us to optimize and integrate every layer of the infrastructure stack to maximize performance, diversify our supply chain and give customers infrastructure choice.”


The Microsoft Azure Maia AI Accelerator

Ever since the release of OpenAI’s ChatGPT, tech firms around the world have been scrambling for resources, both human and technological, to keep pace in the AI race. Google has Bard, built on its PaLM 2 LLM (large language model). xAI’s Grok is currently in beta testing. Amazon is rumored to be secretly training its largest model yet, codenamed Olympus. Microsoft itself has Bing Chat, powered by the same GPT-4 LLM as ChatGPT, in addition to various other AI-powered services such as Microsoft Copilot and the Azure OpenAI Service. The custom silicon, which will form an integral part of Azure’s end-to-end AI architecture, rounds out the firm’s AI offering from hardware up to services.

“We have visibility into the entire stack, and silicon is just one of the ingredients.”

Rani Borkar, CVP for Azure Hardware Systems and Infrastructure

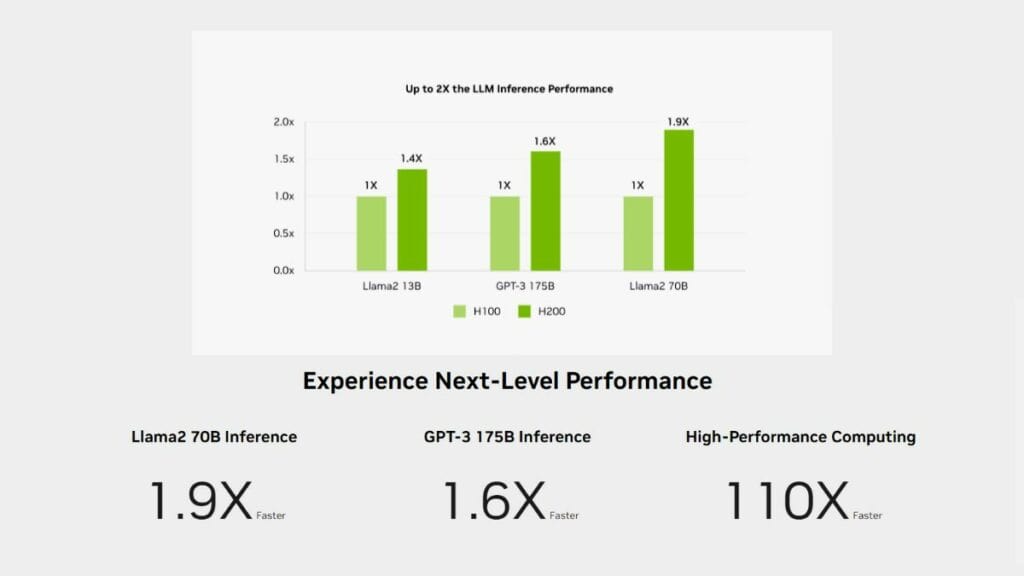

Microsoft also announced “a preview of the new NC H100 v5 Virtual Machine Series built for NVIDIA H100 Tensor Core GPUs, offering greater performance, reliability and efficiency for mid-range AI training and generative AI inferencing. Microsoft will also add the latest NVIDIA H200 Tensor Core GPU to its fleet next year to support larger model inferencing with no increase in latency.”

The H200 GPUs are expected to speed up inference on some AI workloads by as much as 1.9x, even compared with the relatively recent H100 servers.