It’s no secret that Nvidia has been championing generative AI over the past handful of years, with DLSS (Deep Learning Super Sampling) its crown jewel in the gaming hardware space for over five years now. The technology uses a neural network trained by Nvidia, running on dedicated Tensor cores, to render games at a lower internal resolution and upscale the output to a higher one. This makes 4K gaming possible on mid-range GPUs and delivers far higher framerates than we’d otherwise see.

However, over the last three GPU generations, it’s gone from an optional framerate boost to an essential tool for playable framerates, especially as game optimization has been more hit-and-miss in recent years. The software has been steadily improved since DLSS 2, which works on every RTX card including Ampere (RTX 30 series), but it truly came into its own with DLSS 3 and Frame Generation. That feature took things to the next level by using AI to generate entirely new frames between traditionally rendered ones, but with a catch.

That’s because DLSS 3’s Frame Generation only works on Nvidia’s most recent GPU generation, the RTX 40 series, which effectively locked the next generation of its AI tech behind a paywall. It’s a double-edged sword: while developers gain a way to boost framerates through AI, it sets the precedent that you’ll need to upgrade every GPU generation to keep up, and drives another nail into the coffin of native performance.

How Nvidia is using AI to replace native performance

More games are being made with DLSS in mind to bolster the numbers instead of relying on native performance, and this is something Nvidia itself confidently runs with. Take the Alan Wake II DLSS 3.5 reveal trailer, which shows the game running at about 30fps natively (which is poor) before the tech is switched on, boosting the figures all the way up to a 112fps average. While there’s minimal visual degradation, it shows Team Green’s confidence in its tech, and less concern about how its cards perform from a raw power point of view.

Unlike DLSS 3.5’s Ray Reconstruction, DLSS 3.7 is more about improving overall image quality to get closer to native. We can see the differences in Cyberpunk 2077 by comparing versions 3.5 and 3.7 (via Frozburn via YouTube). In the side-by-side analysis, the new preset shows less ghosting on distant moving objects and more definition on palm trees, buildings, street signs, and billboards. It’s a similar story when the car is in motion, as reflections and shadows are sharper.

While this technology is undoubtedly impressive, it marks a shift away from prioritising hardware that can run games natively and towards relying on AI to provide playable framerates, which I have mixed feelings about. On one hand, I love the tech and think it’s a great way of making high-end cards even more impressive; on the other, it feels more like a crutch for mid-range and lower-powered GPUs.

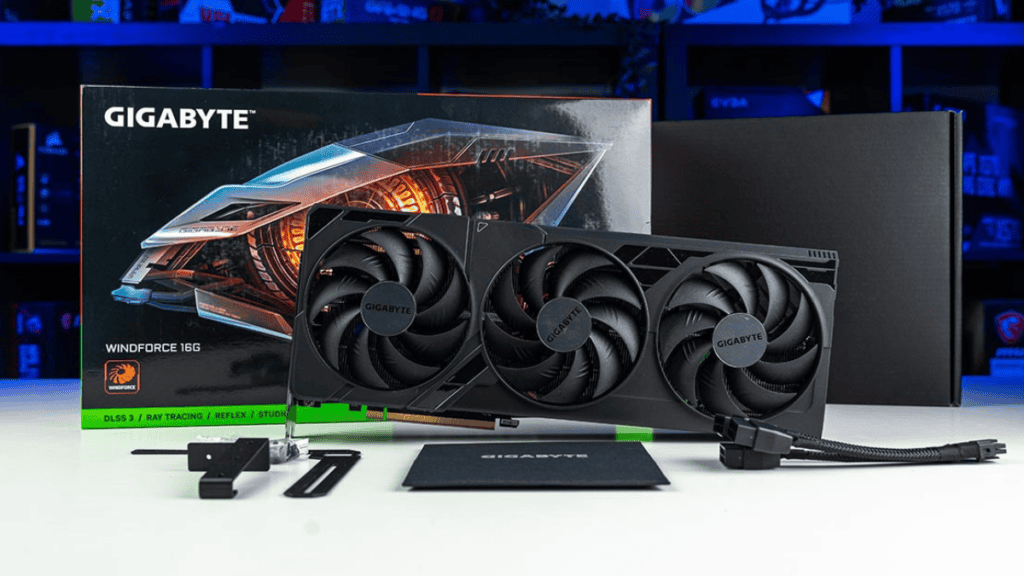

The RTX 4060 is a good card for 1080p and light 1440p use at its $299 price point. However, it really needs DLSS 3’s Frame Generation to fully flourish. The problem is that, with DLSS upscaling enabled at 1080p, you’ll essentially be rendering at 720p and then upscaling back up, which can have mixed results. Upscaling from 1080p to 1440p is more hit-and-miss, but it’s the only real way to get playable framerates. This is where the card’s limitations start to show; you can find out more in our dedicated RTX 4060 review.
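To put the 1080p-to-720p example into numbers, here’s a minimal Python sketch of how an output resolution maps to the internal resolution DLSS actually renders at. It assumes the commonly cited default per-axis scale factors for each DLSS Super Resolution mode (Quality about 66.7%, Balanced 58%, Performance 50%, Ultra Performance about 33.3%); individual games can use different values, and the function names are purely illustrative rather than anything from Nvidia’s SDK.

```python
from fractions import Fraction

# Assumed default per-axis scale factors for DLSS Super Resolution modes.
# These are commonly cited defaults; individual games may override them.
SCALE_FACTORS = {
    "Quality": Fraction(2, 3),
    "Balanced": Fraction(58, 100),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Hypothetical helper: internal render resolution for a given output and mode."""
    scale = SCALE_FACTORS[mode]
    # Scale each axis separately, then round to whole pixels.
    return round(out_w * scale), round(out_h * scale)


def pixel_share(out_w: int, out_h: int, mode: str) -> float:
    """Fraction of the output pixels that are actually rendered before upscaling."""
    w, h = internal_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    for mode in SCALE_FACTORS:
        w, h = internal_resolution(1920, 1080, mode)
        share = pixel_share(1920, 1080, mode)
        print(f"1080p {mode}: renders at {w}x{h} ({share:.0%} of output pixels)")
```

Under these assumptions, Quality mode at 1080p works out to 1280x720, only about 44% of the output pixels, so the upscaler has to fill in the majority of the final image. That goes some way to explaining the mixed results at lower output resolutions.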

DLSS 4 could be locked behind RTX 50 series

Given Nvidia’s track record of making DLSS 3 Frame Generation exclusive to the RTX 40 series, the stage is set for the trend to continue, with DLSS 4 and a potential next generation of Frame Generation (a hypothetical Frame Generation 2.0) locked to the RTX 50 series. This isn’t guaranteed, as DLSS 3.5 Ray Reconstruction and the DLSS 3.7 quality update have both come to all RTX cards. However, Frame Generation, the biggest of these technologies, still can’t run on older cards.

There’s also the fact that the RTX 40 series marked a significant price increase over its predecessors, which met with a mixed reception from us and other critics. The key example is the jump from the RTX 3080 ($699) to the RTX 4080 ($1,199), a $500 (roughly 72%) increase just to get an 80-class card that can do DLSS 3 Frame Generation. We could therefore see the RTX 5080 priced even higher, unless Team Green learns from its mistakes, as it did with the RTX 4080 Super ($999).

Modern gaming without DLSS

It’s certainly possible to game at playable framerates without DLSS enabled, but it varies far more from game to game, with only bleeding-edge hardware able to brute-force its way through at higher resolutions. Here at PC Guide, we’ve seen this trend firsthand while reviewing graphics cards not only from Nvidia but also from AMD and Intel, the latter two with their own answers to Nvidia’s upscaling tech: FSR and XeSS, respectively.

Our testing reveals the major weakness of today’s graphics cards when running 4K natively. Take the RTX 4080 Super: it’s a premium GPU, but it can’t quite run Cyberpunk 2077 maxed out at 2160p and 60fps, even without ray tracing enabled. The same goes for the RX 7900 XTX, which is available for the same price: while it managed 70fps in Cyberpunk 2077, it couldn’t run Fortnite maxed out at 4K and 60fps, showing that native performance isn’t consistent across the board.

What does the future of DLSS mean for native performance?

Simply put, with technologies like DLSS now so prevalent (over 500 games support it, via Nvidia), it doesn’t look good for the native performance of tomorrow’s games. Top-end titles are beholden to AI upscaling to achieve playable framerates, and that’s only going to become more apparent over the next few years now that Intel and AMD have thrown their hats into the ring. Essentially, this could mean hardware manufacturers putting more emphasis on Tensor cores and AI accelerators than on pushing the boundaries of ray tracing cores and CUDA cores / Stream Processors / Xe cores.